Today’s vehicles rely on two very powerful eyes (the ones that come with the human operator) to provide most of the information that the computer (the brain behind those eyes) needs for driving. But the cars of the future will be able to take advantage of many more eyes. Camera technology is advancing rapidly, and the inclusion of cameras in phones and other computing devices is driving their cost down. It is not outside the realm of possibility that the fully autonomous vehicles of tomorrow will use tens, if not hundreds, of cameras arranged around the vehicle to give their processors full awareness of the car’s surroundings.

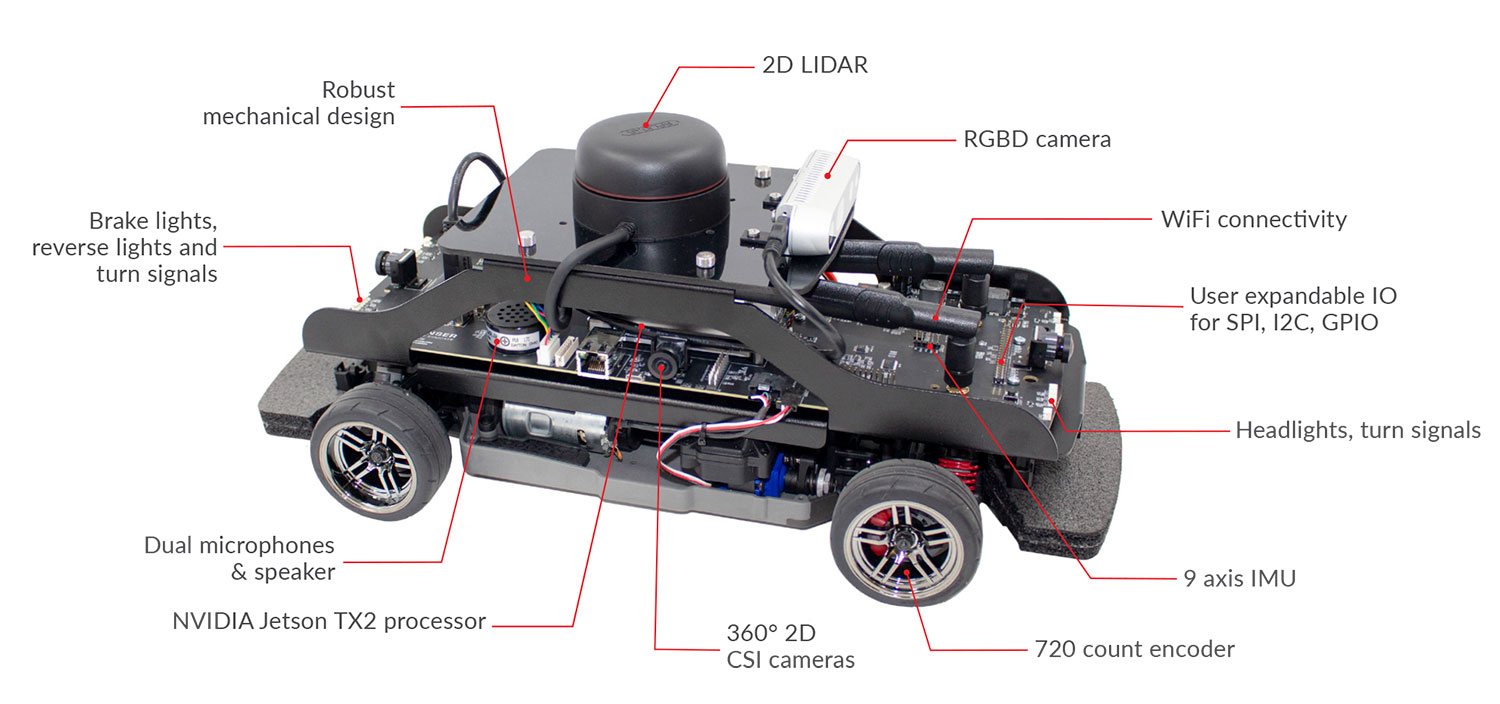

In this blog post, I am going to talk about a trend we at Quanser have observed in self-driving car research: a focus on vision-based information, and how you can work on these types of applications using the Quanser QCar. If you have not read about the QCar before, it is the vehicle component of Quanser’s new autonomous vehicle platform. With academic researchers in mind, we did everything we could to instrument it appropriately and to optimize all the I/O and hardware at the lowest level so that it is exposed to the software at the highest level. That’s part of our development philosophy with every product: in a nutshell, to enable you, the researchers, to do anything you want to do and really push the envelope of what’s possible in self-driving research.

The Eyes of the QCar

As you can see from the image, the QCar is well equipped for vision-based applications. It comes with a front-facing Intel RealSense RGBD camera. Plus, we added four cameras around the vehicle that are piped directly into the GPU of the Nvidia platform to give you a full 360-degree view as well.

The RGBD camera on the QCar is a fairly recent Intel RealSense D435 that provides high-resolution, high-frame-rate images. Here is an example of the RealSense camera used for a basic stop-and-go application. With the depth and RGB information, you can segment your image, find the stop sign, and then detect when you’re close to it:
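As a rough sketch of the stop-and-go decision described above (not the actual QCar implementation), the Python below assumes the RGB segmentation has already produced a binary mask of stop-sign pixels, and that a depth image in meters is aligned with it pixel for pixel. The function names `sign_distance` and `should_stop`, the 0.5 m stopping distance, and the minimum pixel count are all hypothetical choices made for illustration.

```python
from statistics import median

def sign_distance(sign_mask, depth_m, min_pixels=3):
    """Median depth (in meters) over the pixels flagged as stop sign.

    sign_mask and depth_m are same-sized lists of rows. A depth of 0 is
    treated as "no reading" (an assumption about the sensor) and skipped.
    Returns None when too few valid pixels are available.
    """
    depths = [
        d
        for mask_row, depth_row in zip(sign_mask, depth_m)
        for m, d in zip(mask_row, depth_row)
        if m and d > 0
    ]
    if len(depths) < min_pixels:
        return None
    return median(depths)

def should_stop(sign_mask, depth_m, stop_distance_m=0.5):
    """True when a stop sign is visible and closer than stop_distance_m."""
    distance = sign_distance(sign_mask, depth_m)
    return distance is not None and distance <= stop_distance_m

# Tiny example: a 2 x 3 frame where the right two columns are "stop sign".
mask = [[0, 1, 1],
        [0, 1, 1]]
depth = [[2.0, 0.40, 0.50],
         [2.0, 0.00, 0.45]]  # the 0.00 reading is ignored as invalid
print(should_stop(mask, depth))
```

Using the median rather than the mean keeps a few bad depth readings on the edge of the sign from triggering or delaying the stop.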

While you can access the other four cameras positioned around the vehicle individually, you can also stitch their views into a wide video strip, like the one in the clip below, to see 360 degrees around the vehicle:

Here’s a very quick video that shows how you can leverage both sets of cameras within the same application. The QCar keeps its lane using the four surrounding CSI cameras, while the RGBD camera looks for any distant obstacles in the way so that the car can stop:

How Does It Work?

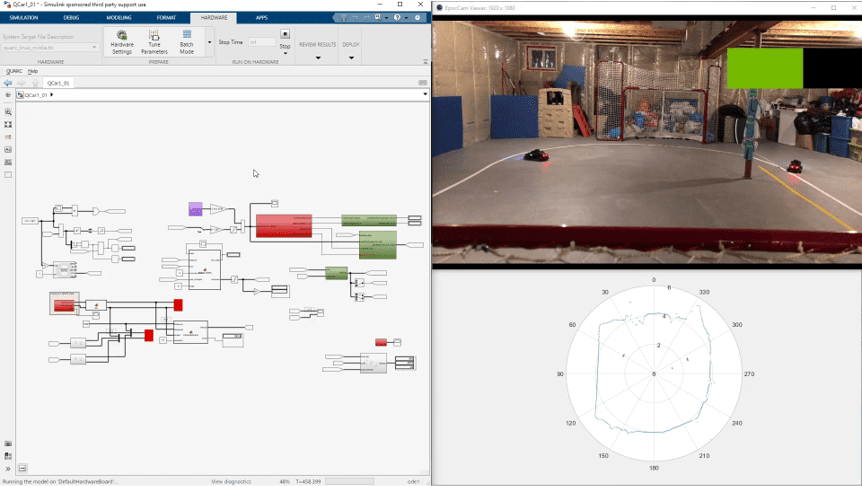

Let’s look closer at a similar type of application. As you will see in the video below, during the COVID-19 pandemic, I relocated to my basement and set up some of our studio roadways there to work on a steering application. My goal is to keep the car on the yellow line while looking ahead to predict where the road is going. The QCar is actually not doing a great job of that right now, but that is where the power of this research studio comes in: you can deep-dive into any application and iterate on the algorithms, all the while leveraging the model-based design and rapid controls prototyping techniques that Simulink is known for!

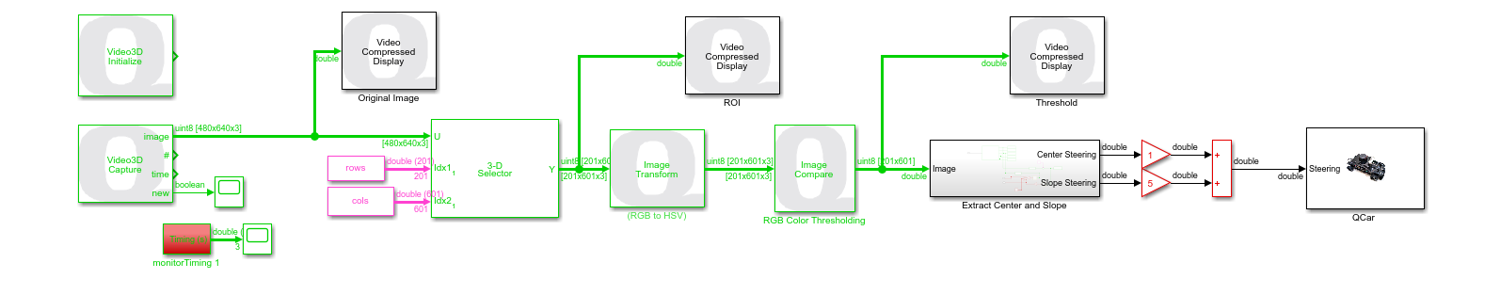

The lane-keeping algorithm that I used is fairly straightforward. I am using image capture blocks in QUARC, Quanser’s real-time software that runs a Simulink diagram on real hardware in real time. While the diagram is running, you get all of the image streams I showed earlier, compressed, in display blocks. Basically, the QCar runs the full algorithm on the vehicle itself and then streams the information to a user interface on my PC.

Lane-Keeping Algorithm

The algorithm captures a 640 x 480 image and displays the original. I then select a region of interest, in this case the bottom third of the image, as I’m mostly interested in detecting the lines. Next, I transform that region from the RGB space to the HSV space, where it is a little easier to threshold and distinguish different colors. Finally, a simple thresholding step looks for just the yellow line.
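To make those steps concrete, here is a minimal sketch in plain Python of the region-of-interest, RGB-to-HSV, and thresholding stages. It is not the QUARC/Simulink model itself: the image is assumed to be a list of rows of `(r, g, b)` tuples in the 0 to 255 range, and the hue, saturation, and value limits for "yellow" are illustrative guesses that would need tuning for a real camera and lighting setup.

```python
import colorsys

def bottom_third(image):
    """Return the bottom third of the rows of an image (a list of rows)."""
    height = len(image)
    return image[height - height // 3:]

def is_yellow(pixel, hue_range=(40 / 360, 70 / 360), min_sat=0.4, min_val=0.4):
    """Threshold one (r, g, b) pixel in HSV space.

    The limits are assumed values, expressed on colorsys's 0..1 scale.
    """
    r, g, b = pixel
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return hue_range[0] <= h <= hue_range[1] and s >= min_sat and v >= min_val

def yellow_mask(image):
    """Binary mask (1 = yellow) computed over the bottom third of the image."""
    return [[1 if is_yellow(p) else 0 for p in row] for row in bottom_third(image)]

# Tiny example: a 6 x 4 gray "road" with a yellow stripe in column 1.
GRAY, YELLOW = (90, 90, 90), (255, 220, 0)
frame = [[GRAY, YELLOW, GRAY, GRAY] for _ in range(6)]
for row in yellow_mask(frame):
    print(row)
```

Thresholding in HSV means the color test depends mainly on hue, so the same limits tolerate moderate changes in brightness better than a test on raw RGB values would. On a real 640 x 480 frame you would use an image-processing library rather than nested lists, but the logic is the same.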

A binary image is generated after the thresholding block, and we’ve written a couple of algorithms that extract the position of the line center from that binary image. We also estimate the slope of the line, i.e., the angle of the turn. Finally, I put different weights on the line center and the line slope. I want the QCar to go a bit faster, so I put most of the weight on the upcoming turn that the car is seeing. This is a simple example. My next step is to make it more dynamic: the faster I go, the further ahead I want to look, so the car stays on its path while predicting where the road is going and making decisions based on that as well. So some more development to come!
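One way to picture the center-and-slope step is the plain-Python sketch below. It is an assumed stand-in, not the algorithms used on the QCar: it takes the centroid of the lit pixels in each row of the binary mask, fits a least-squares line through those centroids, and blends the two results into a steering value. The function names, the 0.3/0.7 weights, the sign convention (positive means steer right), and the clamping to the range -1 to 1 are all hypothetical.

```python
def row_centroids(mask):
    """(row, mean column) for every row of the mask with at least one lit pixel."""
    points = []
    for y, row in enumerate(mask):
        cols = [x for x, v in enumerate(row) if v]
        if cols:
            points.append((y, sum(cols) / len(cols)))
    return points

def line_center_and_slope(mask):
    """Return (center_offset, slope), or None if the line is not visible.

    center_offset is the line's mean column scaled to -1 (left edge) .. 1
    (right edge). slope is columns per row from a least-squares fit; rows
    grow downward, so a negative slope means the line drifts right ahead.
    """
    points = row_centroids(mask)
    width = len(mask[0]) if mask else 0
    if len(points) < 2 or width < 2:
        return None
    n = len(points)
    mean_y = sum(y for y, _ in points) / n
    mean_x = sum(x for _, x in points) / n
    var_y = sum((y - mean_y) ** 2 for y, _ in points)
    cov = sum((y - mean_y) * (x - mean_x) for y, x in points)
    half_width = (width - 1) / 2
    return (mean_x - half_width) / half_width, cov / var_y

def steering_command(center_offset, slope, w_center=0.3, w_slope=0.7):
    """Weighted blend of where the line is and where it is heading."""
    heading = -slope  # flip sign so a right-hand bend ahead is positive
    command = w_center * center_offset + w_slope * heading
    return max(-1.0, min(1.0, command))

# Tiny example: a line that moves one column to the right per row going up.
mask = [[0, 0, 0, 0, 1],
        [0, 0, 0, 1, 0],
        [0, 0, 1, 0, 0],
        [0, 1, 0, 0, 0]]
center, slope = line_center_and_slope(mask)
print(center, slope, steering_command(center, slope))
```

Putting the larger weight on the slope term mirrors the choice described above: the car reacts mostly to the upcoming turn rather than to its current offset from the line. A speed-dependent version could shift those weights, or the rows used for the fit, as the car goes faster.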

From Real to Virtual Hardware

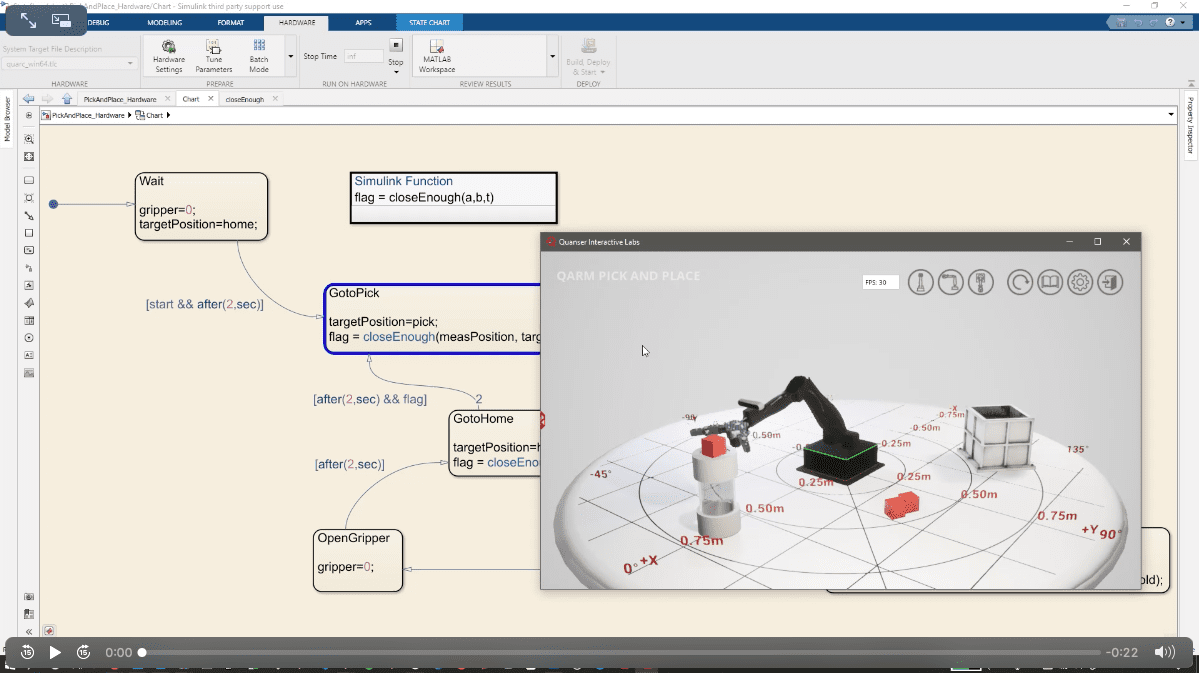

Another interesting thing I want to show is our new virtual hardware platform, Quanser Interactive Labs. I have taken the lane-keeping algorithm and applied it directly to the QLabs Virtual QBot 2e, a digital version of our QBot 2e system. The QBot 2e is an entry-level mobile robot, sort of the introductory version of the QCar. I am able to target the virtual robot with the exact same algorithm I just used for my physical QCar, which perfectly illustrates the open architecture design of all our platforms.

Coming Soon…

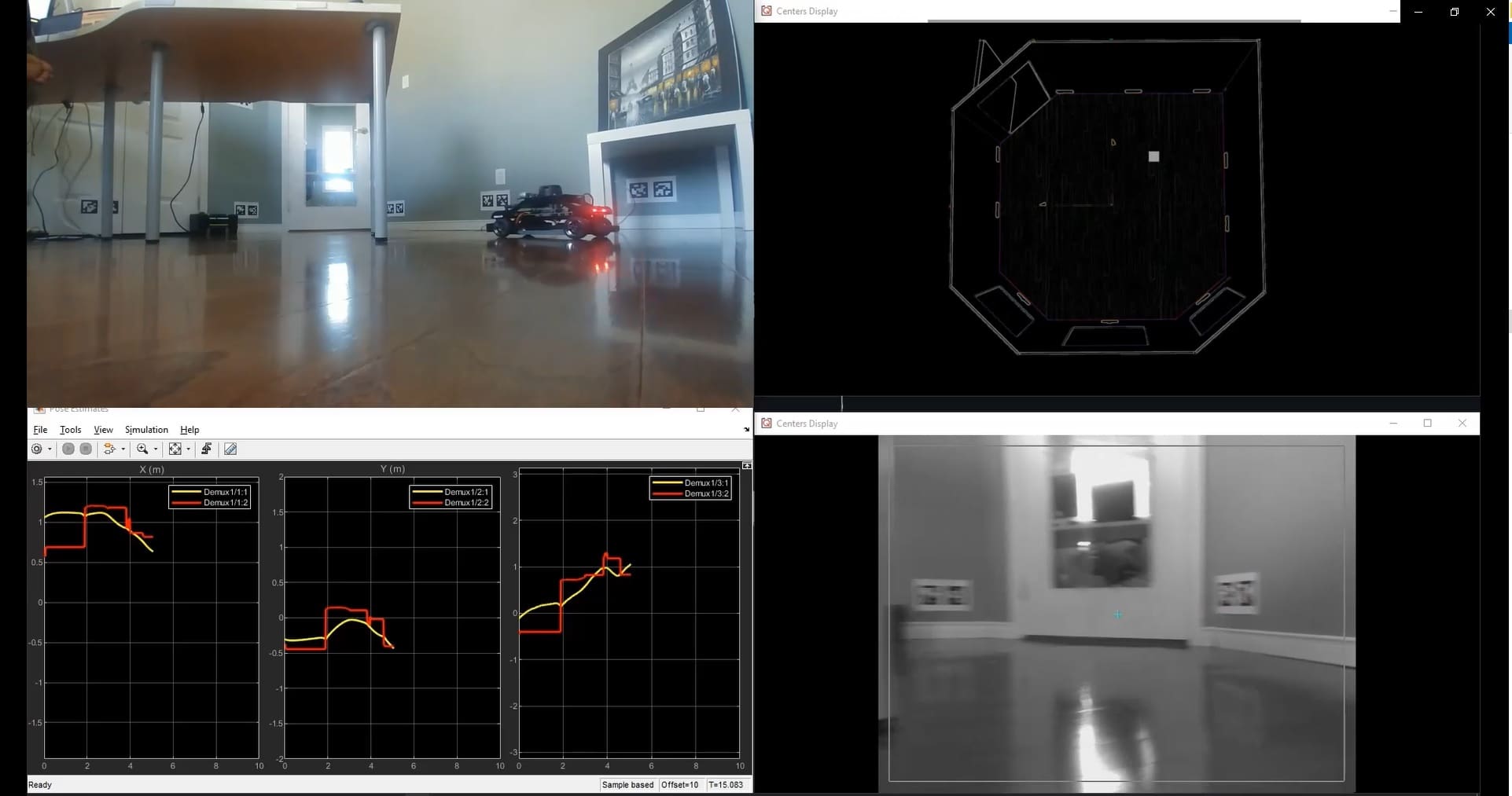

And I want to end with a video showing some work in progress leveraging mixed virtual and real environments.

In the top left, you see the actual QCar driving in an open space, and we are localizing where the car is within that space. Once we have the localization information, we can augment it in a mixed reality fashion. The car sees the real information, plus we are injecting the virtual environment into the sensor stream as well. So again, still a work in progress, but we’re very excited about where this type of technology is going.

I hope you found this overview useful. We are not quite ready to fully replace those two super sensors (human eyes) and supercomputer (human brain) yet, but we are super excited about all the research and applications that can be developed using our Self-Driving Car research platform that will help propel us towards that goal much faster!