When we launched the Self-Driving Car Research Studio with its feature vehicle, a 1/10 scale QCar, earlier this year, we promised a wide array of application examples, including, of course, a self-driving one. We have developed line-following applications before, and many of our products, from the Mechatronic Systems board to the Quanser Driving Simulator and QBot 2e ground robot, come with complete examples for it. But autonomous driving requires more than simply ‘staying on top of a line.’ This time, we needed to teach the QCar how to stay in its lane.

Staying in the Lane

We started by turning the engineering area of our office into a mini driving range, laying down a complete 2-lane roadway about 30 to 40 m long. By the way, this ‘infrastructure’ is a part of our research studio. You can arrange the fourteen road panels to create road scenarios with solid dividers, dashed dividers, and 3- or 4-way intersections, and test your own algorithms for lane changes, turns, etc.

During the initial R&D phase, we just wanted to push the limits on how fast the QCar could drive forward while staying in its lane. Spoiled by the freedom to tinker that we have at Quanser and armed with the power of QUARC, we did not hesitate one bit when posed with this new problem and got into prototyping our algorithms from scratch.

Developing the Lane-keeping Algorithm

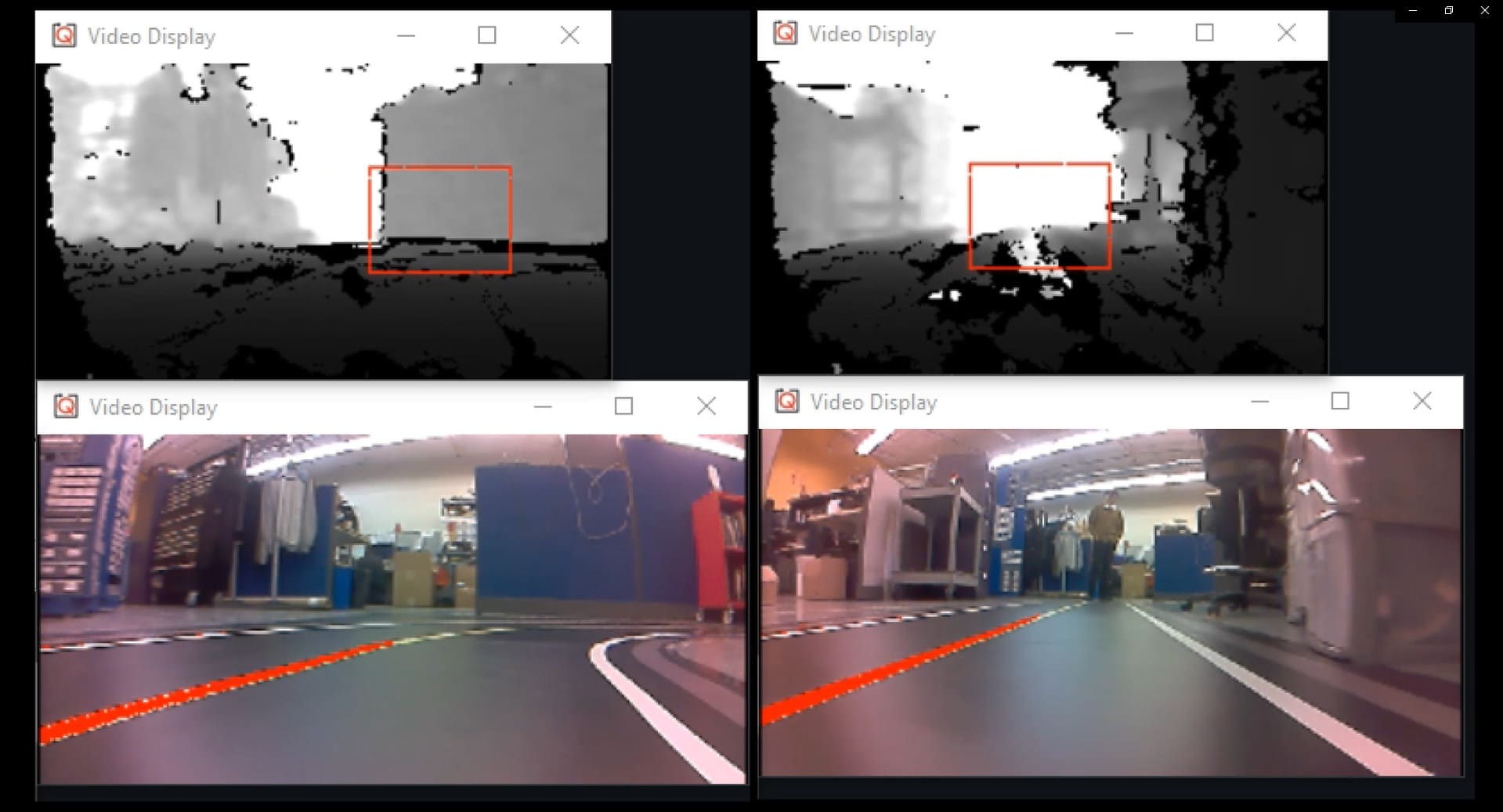

Starting with an image feed from our high-resolution front-facing CSI camera at 120 Hz, we filtered it to extract the yellow lane markings, giving us a bitonal image where 1 represented the lane marking and 0 everything else. Running a first-order polynomial (straight-line) fit on all the marking pixels in this image gave us the slope and intercept of the lane in image coordinates (the measured parameters). Knowing what the slope and intercept should be for a car sitting perfectly in the center of the lane (the nominal parameters), we were able to have our car steer left or right in proportion to the error between the nominal and measured parameters, with the steering and driving loops running at about 500 Hz. Just like that, we were able to follow the lane.

By tuning the nominal parameters, we were able to change the distance of the car from the lane markings. By changing the region of interest that we analyze in the source image (from the CSI camera), we were able to follow the left or right lane marking. By using both concepts together, we were also able to do smooth lane changes.
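As a rough illustration of the fit-and-steer idea (not the actual QUARC/Simulink implementation), here is a minimal Python sketch using NumPy. Several things in it are assumptions made for the example: the line is fitted as column versus row because lane markings run mostly up and down the image, the function names `fit_lane` and `steering_command` are hypothetical, and the gains, sign convention, and steering limit are placeholders that would have to be tuned on a real car.

```python
import numpy as np

def fit_lane(mask):
    """Fit col = slope * row + intercept to the lane pixels of a binary mask.

    mask: 2D array where 1 marks a lane pixel and 0 everything else.
    Returns (slope, intercept), or None if a line cannot be defined.
    """
    rows, cols = np.nonzero(mask)
    if rows.size < 2 or rows.max() == rows.min():
        return None  # too few pixels, or all on one row
    slope, intercept = np.polyfit(rows, cols, 1)
    return float(slope), float(intercept)

def steering_command(measured, nominal, k_slope=1.0, k_intercept=0.01, limit=0.5):
    """Proportional steering from the error between nominal and measured line
    parameters. Gains, sign convention, and limit (radians) are assumed values."""
    slope_err = nominal[0] - measured[0]
    intercept_err = nominal[1] - measured[1]
    steer = k_slope * slope_err + k_intercept * intercept_err
    return float(np.clip(steer, -limit, limit))

# Synthetic example: a marking that follows col = row + 5
mask = np.zeros((60, 60), dtype=np.uint8)
for r in range(50):
    mask[r, r + 5] = 1
measured = fit_lane(mask)
steer = steering_command(measured, nominal=(1.0, 5.0))
```

In this sketch, shifting the `nominal` pair moves the car's target offset from the marking, and cropping `mask` to a different region before calling `fit_lane` selects which marking is followed.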

Changing lanes from outer to inner, and back

Adding Speed by Braking

Our R&D Director, Paul Karam, likes throwing challenges our way, and he wanted us to push the speed limits while driving autonomously. He recently wrote a blog post regarding advanced image processing algorithms. Check it out.

In the video above, the QCar is driving at a measly 1 m/s. It’s a 1/10 scale platform though, so that corresponds to a full-scale speed of about 36 km/hr or 22 mph. We started pushing the speed of the platform and saw the QCar failing at the turns. These weren’t arbitrary turns; they were decent turns at a 1/10 scale, based on the real geometry of full-scale turn standards in Canada. What were we doing wrong?

The problem was braking: we weren’t doing any. The QCar was trying to make the turns while maintaining its full speed, so the speed setpoint couldn’t go any higher without the car missing the turn. To solve this problem, we started modulating the speed command to our speed controller by multiplying it with the cosine of the steering command. As a result, the more the car tried to steer, the lower the speed setpoint became, improving the car’s turning performance dramatically. We could now push up to 72 km/hr. And although that was pretty good, it still wasn’t 100 km/hr.
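The cosine modulation is simple enough to sketch in a few lines of Python. This is only an illustration of the idea described above; the function name `modulated_speed` is hypothetical, and it assumes the steering command is an angle in radians.

```python
import math

def modulated_speed(base_speed, steering_angle_rad):
    """Scale the speed setpoint by the cosine of the steering command.

    Assumes the steering command is an angle in radians. Driving straight
    (angle 0) keeps the full setpoint; steering harder in either direction
    lowers it.
    """
    return base_speed * math.cos(steering_angle_rad)

straight = modulated_speed(2.0, 0.0)   # full setpoint when driving straight
turning = modulated_speed(2.0, 0.5)    # reduced setpoint at 0.5 rad of steering
```

With this form, a steering command of 0.5 rad reduces the setpoint to roughly 88% of its straight-line value, and the reduction is the same for left and right turns because the cosine is an even function.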

Looking Ahead

The last piece of the puzzle came with looking ahead. When driving ourselves, we do that all the time. We changed the vertical height of the region of interest in our source image based on the current speed of the vehicle. The faster it went, the further it looked ahead, and that did it. Now we were able to achieve 2.8 m/s, just over 100 km/hr or 60 mph. Of course, the car is making its way around what is basically a 400 m track to scale, and, to be fair, it does look a little dramatic. Notice in the bottom right video (the 100 km/hr one) that the red lane extends further out and away from the bottom of the image (greater number of rows being tracked) when the car is driving straight and fast. As it turns, the red lane being tracked seems to get shorter (the car starts looking closer to itself).
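One way to picture the speed-dependent look-ahead is a region of interest that is anchored at the bottom of the image and grows upward with speed. The Python sketch below is an assumption-laden illustration, not the implementation used on the QCar: the linear mapping, the function name `roi_rows`, and the row counts `min_rows` and `max_rows` are all hypothetical, and `max_speed` is simply set to the 2.8 m/s mentioned above.

```python
def roi_rows(speed, image_height, min_rows=60, max_rows=200, max_speed=2.8):
    """Return (top, bottom) row indices of a region of interest anchored at
    the bottom of the image.

    The number of rows grows linearly with speed (an assumed mapping), so a
    faster car analyzes rows further up the image, i.e. further down the road.
    """
    frac = min(max(speed / max_speed, 0.0), 1.0)  # clamp to [0, 1]
    n_rows = int(round(min_rows + frac * (max_rows - min_rows)))
    n_rows = min(n_rows, image_height)
    return image_height - n_rows, image_height

slow_roi = roi_rows(0.0, 480)   # short look-ahead: rows 420..480
fast_roi = roi_rows(2.8, 480)   # long look-ahead: rows 280..480
```

Because the speed setpoint drops in the turns, a mapping like this also shortens the look-ahead there, which matches the shrinking tracked lane described above.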

Autonomous driving at 72 km/hr (left column) and 100 km/hr (right column)

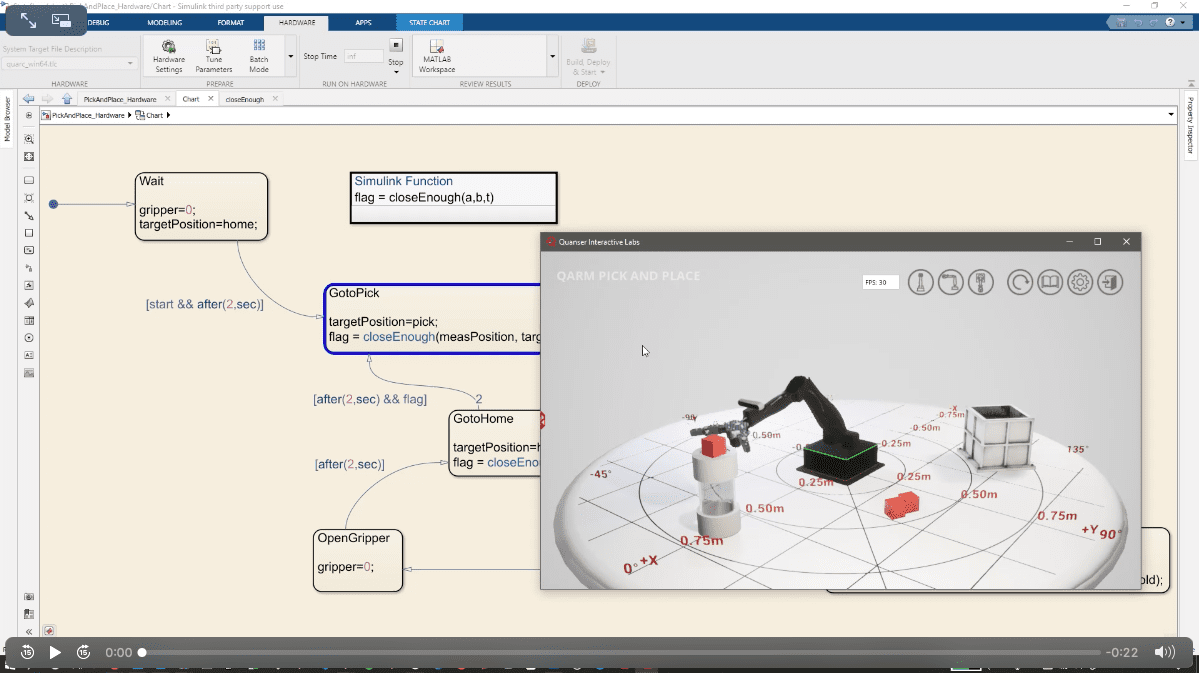
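The full-scale speeds quoted in this post come from multiplying the QCar's speed by the scale factor of 10 and converting units. A small Python helper makes the arithmetic explicit; the function names are hypothetical and chosen only for this example.

```python
SCALE = 10                 # the QCar is a 1/10 scale platform
KM_PER_MILE = 1.609344

def full_scale_kmh(model_speed_mps, scale=SCALE):
    """Convert a model speed in m/s to the equivalent full-scale speed in km/h."""
    return model_speed_mps * scale * 3.6

def full_scale_mph(model_speed_mps, scale=SCALE):
    """Convert a model speed in m/s to the equivalent full-scale speed in mph."""
    return full_scale_kmh(model_speed_mps, scale) / KM_PER_MILE

for v in (1.0, 2.0, 2.8):
    print(f"{v} m/s -> {full_scale_kmh(v):.1f} km/h, {full_scale_mph(v):.1f} mph")
```

So 1 m/s corresponds to 36 km/hr, 2 m/s to 72 km/hr, and 2.8 m/s to just over 100 km/hr.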

In about a month of tinkering with this algorithm, we got the QCar not only to stay in its lane at blazing speeds, but also to change lanes upon a high-level user command such as ‘move to the other lane.’ On top of that, we used the QCar’s Intel RealSense depth camera at 90 Hz to simultaneously detect obstacles and either track the speed of moving obstacles (such as another car, otherwise referred to as Adaptive Cruise Control) or even stop on a dime for stationary obstacles.
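The post does not describe how the obstacle logic was built, so the following Python sketch is purely a hypothetical illustration of the behavior: match the speed of a moving obstacle ahead, and stop for a stationary or very close one. The function name `speed_with_obstacle`, its inputs, and the two distance thresholds are all assumptions; in practice the distance and obstacle speed would have to be estimated from the depth images.

```python
def speed_with_obstacle(cruise_speed, distance_m, obstacle_speed,
                        stop_distance=0.3, follow_distance=1.0):
    """Pick a speed setpoint given the nearest obstacle ahead.

    distance_m: distance to the nearest obstacle in meters, or None if the
        path is clear. obstacle_speed: its estimated forward speed in m/s.
    Thresholds are assumed values for illustration only.
    """
    if distance_m is None or distance_m > follow_distance:
        return cruise_speed            # nothing close enough to matter
    if distance_m <= stop_distance:
        return 0.0                     # too close: stop
    # Within following range: never go faster than the obstacle, so a
    # stationary obstacle (speed 0) also brings the car to a stop.
    return max(0.0, min(cruise_speed, obstacle_speed))

clear = speed_with_obstacle(2.0, None, 0.0)     # keeps cruising
following = speed_with_obstacle(2.0, 0.8, 1.2)  # matches the car ahead
blocked = speed_with_obstacle(2.0, 0.8, 0.0)    # stops for a stationary obstacle
```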

Obstacle detection and brake response

Over the last month, my colleague John Pineros worked on developing an even more stable lane-keeping algorithm, leveraging MATLAB add-on toolboxes. He will tell you all about it in his own blog post soon! If you can’t wait, watch the webinar where he presented his work.

Where Can YOU Take Autonomous Driving?

Throughout my years at Quanser, I’ve learned to answer the question, “can it be done?” with “let’s try” and “we’ll find out soon enough.” Of course, correctly estimating the scope of a problem is a job in its own right, but the lack of hesitation and the comfort and confidence to try something out from scratch is what engineering is all about. My point is: if our team can create a lane-keeping algorithm and push speed limits using rapid prototyping, a blank Simulink canvas, and existing benchmark technologies available through MATLAB, all within a month or two, imagine what you and your research team, being experts in your field, can achieve with a solution like this! Take a moment, just imagine.