In the earlier blog post, my colleague Derek touched on the ‘robot elephant in the room’ – ROS. Given the size of the research community developing their applications in ROS, we definitely wanted to give them this option with the Self-Driving Car Research Studio’s QCar. So, let me show you how it looks.

Setting up the Demo

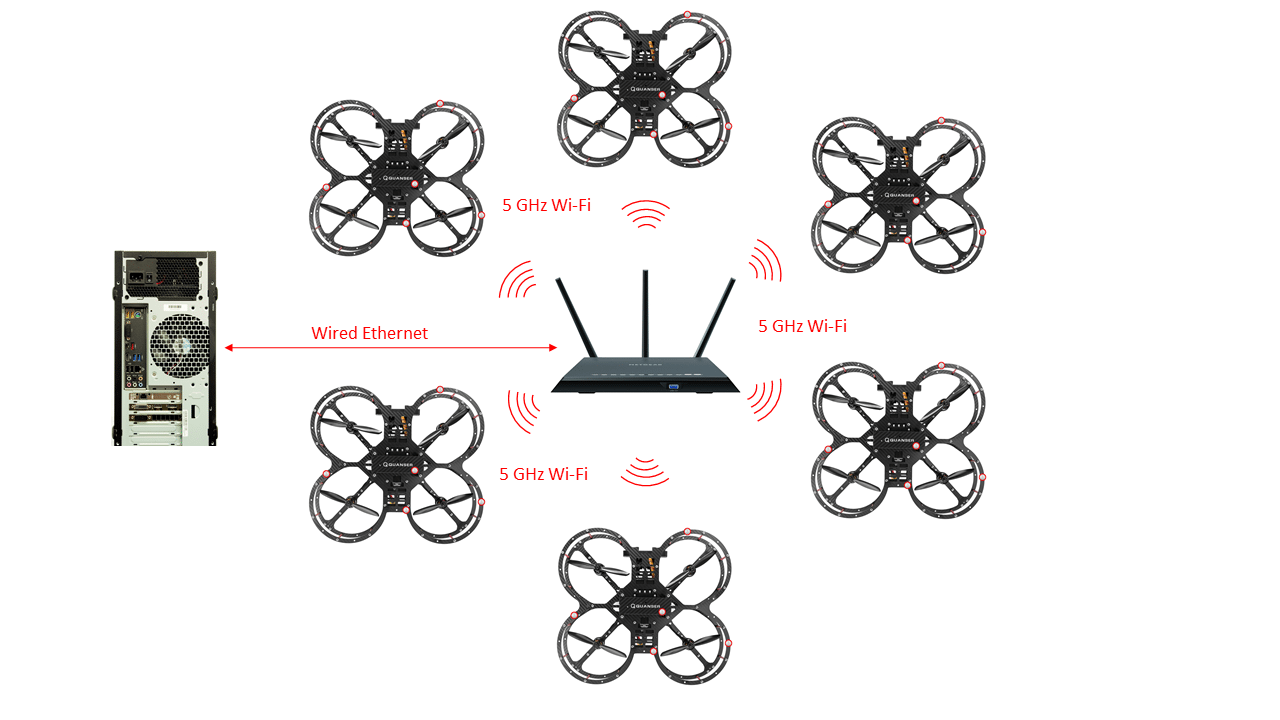

My example shows a typical application development workflow with the QCar platform via ROS, where LIDAR maps the surrounding environment. I am using a general ROS structure with self-made and third-party ROS nodes actively talking with each other, i.e., sending and receiving data.

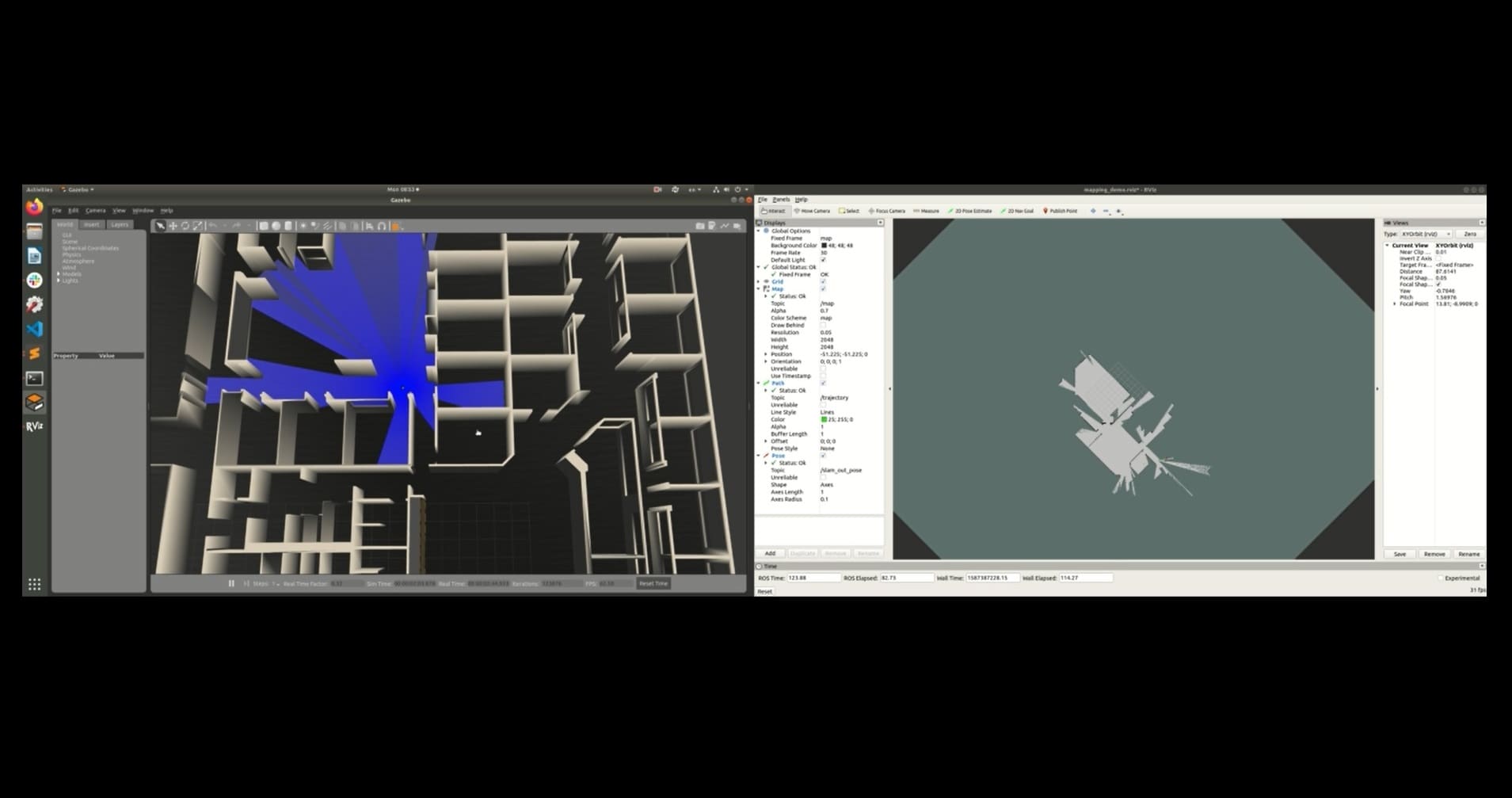

As you can see in the video below, I am using the ROS Gazebo plug-in, the RViz 3D visualization tool for ROS, and the Ackermann steering ROS package, where the Ackermann node builds the connection between the simulation environment and the actual gamepad. Basically, the QCar Gazebo model subscribes to an Ackermann command that is sent by the command node, and the sensor visualization is handled by RViz.
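To make the command flow a little more concrete, here is a minimal sketch of how a command node might turn gamepad axes into an Ackermann command. It is plain Python with no ROS dependency, so it only illustrates the mapping, not the actual node from the video. The `AckermannCommand` class is a hypothetical stand-in that mirrors the `speed` and `steering_angle` fields of the `ackermann_msgs/AckermannDrive` message, and the two limits are assumed values picked for illustration, not QCar specifications.

```python
from dataclasses import dataclass

# Hypothetical limits, chosen for illustration only (not QCar specifications).
MAX_SPEED = 1.0           # metres per second
MAX_STEERING_ANGLE = 0.5  # radians


@dataclass
class AckermannCommand:
    """Stand-in mirroring two fields of ackermann_msgs/AckermannDrive."""
    speed: float
    steering_angle: float


def clamp(value, low, high):
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def gamepad_to_command(throttle_axis, steering_axis):
    """Map two gamepad axes, nominally in [-1, 1], to an Ackermann command."""
    throttle = clamp(throttle_axis, -1.0, 1.0)
    steering = clamp(steering_axis, -1.0, 1.0)
    return AckermannCommand(
        speed=throttle * MAX_SPEED,
        steering_angle=steering * MAX_STEERING_ANGLE,
    )


# Half throttle with the steering stick pushed fully to one side.
command = gamepad_to_command(0.5, -1.0)
print(command)
```

In a real ROS node, the values computed here would be copied into an Ackermann message and published on the topic that the Gazebo model subscribes to.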

When I launch Gazebo, the QCar model appears in an empty world. The blue rays show the LIDAR scanning regions, and they also indicate that the other components, such as the cameras, motor, and turning servo, are functional. But with this initial setup, the space is empty, so the LIDAR won’t detect any objects. So I added a few boxes and cylinders for the LIDAR to detect.

In the second terminal, I launched RViz. The reference frame in RViz is the base of the QCar. That means that when I drive the QCar, it won’t move inside RViz, but you can see the camera feed in the top-right corner of the RViz window.
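If it seems odd that the car stays put while you drive it, a small worked example may help. The sketch below is plain Python, not code from the demo. It expresses a world point in a frame attached to the car's base, assuming a flat 2D world and a car pose given as position plus yaw. The function name `world_to_base` and the sample coordinates are hypothetical.

```python
import math


def world_to_base(point_xy, car_xy, car_yaw):
    """Express a 2D world point in the frame attached to the car's base.

    The point is shifted by the car position and rotated by minus the yaw.
    """
    dx = point_xy[0] - car_xy[0]
    dy = point_xy[1] - car_xy[1]
    cos_yaw = math.cos(car_yaw)
    sin_yaw = math.sin(car_yaw)
    return (cos_yaw * dx + sin_yaw * dy, -sin_yaw * dx + cos_yaw * dy)


box = (3.0, 0.0)  # hypothetical obstacle position in the world frame

# Before driving: car at the world origin, facing along +x.
box_before = world_to_base(box, (0.0, 0.0), 0.0)

# After driving one metre forward: the box is now one metre closer.
box_after = world_to_base(box, (1.0, 0.0), 0.0)

# The car's own position always maps to the origin of its base frame.
car_in_own_frame = world_to_base((1.0, 0.0), (1.0, 0.0), 0.0)

print(box_before, box_after, car_in_own_frame)
```

Seen from the base frame, the car never leaves the origin. Only the surrounding objects shift, which is why the car looks stationary in a view that uses its base as the reference.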

And lastly, my third terminal is for the command node.

Map generation with ROS

Once I added the objects to the Gazebo environment, the camera feed in RViz picked up the objects in front of the car. This camera feed comes from the Intel RealSense camera on top of the QCar. As a side note, we also have four CSI cameras located at the front, back, and sides of the QCar. But for this video, I opened only one camera stream.

When I start driving the QCar using a gamepad, the QCar model subscribes to the Ackermann steering command that the gamepad control node is publishing. You can see the car is driving in Gazebo, mimicking the actual dynamic behavior of the QCar. In RViz, the camera feed and the point cloud data (the white dotted lines around the car) are updating as well.
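For readers curious about where those dotted points come from, here is a minimal sketch of turning a planar LIDAR scan into x-y points around the car. It is plain Python rather than the code behind the video. The parameter names follow the fields of the `sensor_msgs/LaserScan` message (`ranges`, `angle_min`, `angle_increment`, `range_min`, `range_max`), while the function name `scan_to_points` and the sample values are made up for illustration.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, range_min, range_max):
    """Convert polar range readings into (x, y) points in the sensor frame.

    Readings that are not finite or fall outside [range_min, range_max]
    are treated as "nothing detected" and skipped.
    """
    points = []
    for index, distance in enumerate(ranges):
        if not math.isfinite(distance):
            continue
        if distance < range_min or distance > range_max:
            continue
        angle = angle_min + index * angle_increment
        points.append((distance * math.cos(angle), distance * math.sin(angle)))
    return points


# Three hypothetical beams at 0, 90, and 180 degrees; the middle one hits nothing.
sample_ranges = [1.0, float("inf"), 2.0]
points = scan_to_points(
    sample_ranges,
    angle_min=0.0,
    angle_increment=math.pi / 2,
    range_min=0.1,
    range_max=10.0,
)
print(points)
```

In an empty Gazebo world every beam would be skipped, which matches the earlier observation that the LIDAR detects nothing until some boxes and cylinders are added.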

The good thing about using the Gazebo simulation is that you can test your sensor or a self-driving algorithm before you apply it to the actual QCar. You can also test your SLAM applications in the Gazebo environment.