Artificial Intelligence has become more than a field; it has become a household acronym: “AI”. Social media can amplify our impressions of a technology's benefits and pitfalls without ever capturing the understanding that comes from education and knowledge. As such, most people referring to AI today are probably thinking of natural language processing (NLP) models, particularly ChatGPT. By no means am I implying that ChatGPT isn't useful to our civilization, or to the way we seek knowledge. However, I find myself uneasy and restless when I hear concerns about self-driving vehicles framed in terms of Artificial Intelligence, especially when that AI is assumed to be a natural language processing model. The propaganda of fear drives unnecessary thoughts like “the machines are taking over our jobs” and “Skynet is here and now!” I want to do my part by providing some examples of where Artificial Intelligence can be, and is being, used in the self-driving industry, along with my thoughts on the matter.

Increased Autonomy but not Intelligence

Introducing automation and increased autonomy to machines isn't a new concept. Although the “first vehicle powered by a gas engine” was patented by Karl Benz in 1886, it took less than 20 years for the first automatic transmission to be designed, by Sturtevant in 1904. Manual transmissions have traditionally been more fuel efficient (anywhere from 2 to 20%), cheaper to manufacture, lighter and better performing, with a simpler mechanical design, and yet they have a single complication: the driver has to operate a clutch and change gears whenever required. The same goes for cruise control. Although used in vehicles as early as 1908, modern cruise control was invented in 1948. Why use the accelerator and brake pedals to manually hold a desired speed when the vehicle can do it for you? Yet again, automation improves the experience by doing more for the driver.

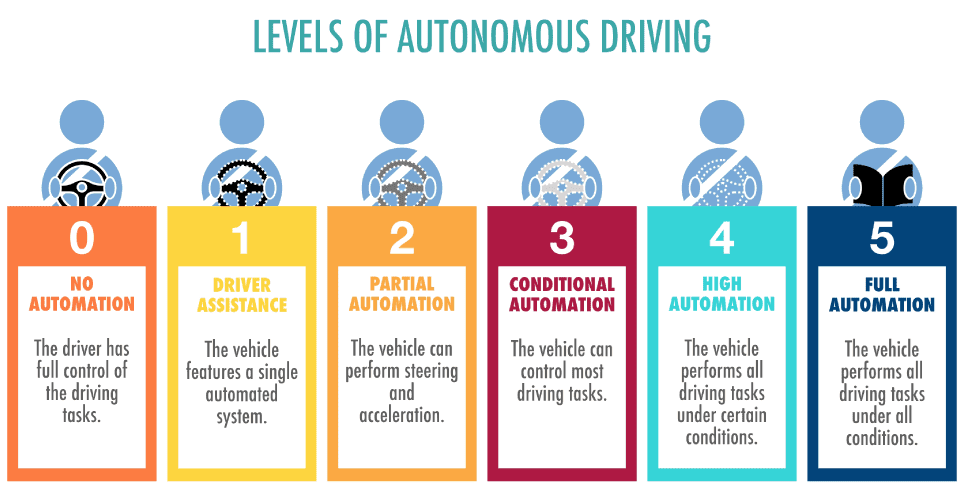

We place quite a bit of emphasis on an improved driver experience, and having to do less is most definitely more for the average driver. Over the years, automobiles have seen an influx of features such as adaptive cruise control that adjusts your speed to the car ahead, blind spot monitoring, rear cross traffic alert, lane departure warning, lane steering assist, automatic parking brakes, and the list goes on. And yet, there is a mixed bag of emotions and opinions when it comes to self-driving. The National Highway Traffic Safety Administration (NHTSA) uses six levels of driving automation, numbered 0 to 5, as defined by SAE International. The features I just listed fall within levels 0 to 2, where the driver remains in charge, although one could argue the most capable systems are pushing on level 3. I find the image below amusing: not only is the level 5 vehicle's driver reading a book, there is no steering wheel.

The jump from level 4 to level 5 does not come easily. The increasing autonomy that paves the way to level 4 functionality makes the driver's life easier, while level 5 functionality negates the need for a driver to begin with (hence the book and the missing steering wheel). This is a fundamental paradigm shift that makes the problem one not of automation, but of intelligence. We need to train a car not only to reduce the number of things the driver has to do, but also to be the driver. While I personally love driving my own car, I find the argument easier to digest when it is framed this way.

Self-Driving Intelligence

Once we accept that a self-driving vehicle is not taking away our freedom, but is fundamentally a different product category, we can ask how it can be built. The vehicle needs access to the information a driver would take into account (and hopefully a lot more), and it must act accordingly. Taking action is a much simpler problem than perceiving information, mainly because the actuation interface is more or less the same: a pedal or two and a steering mechanism. Capturing what a driver perceives, however, is critically difficult. Our ability to be simultaneously aware of hundreds of cars, pedestrians, cyclists, squirrels, pets, road signs, traffic lights, potholes, storm drains, etc., and to judge whether any of them will become a problem, is unparalleled by any machine or computer. Here's how self-driving cars are equipped with sensors to see what we would see, and more, at any instant.

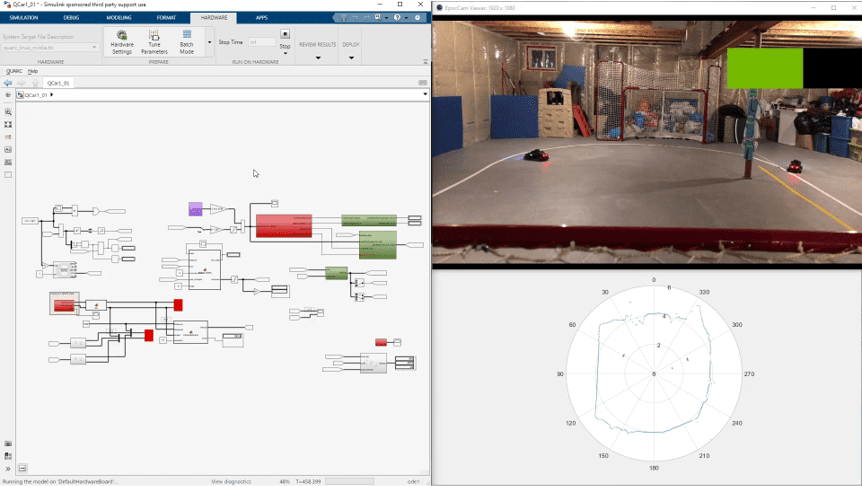

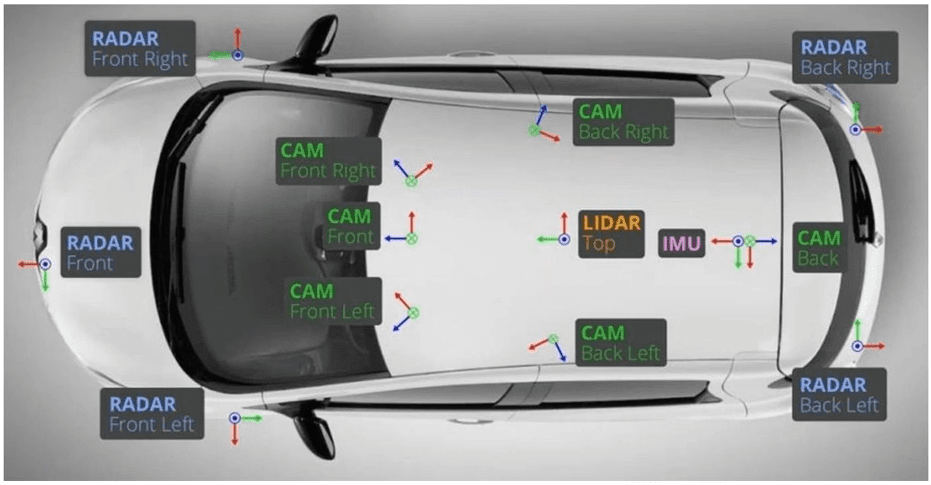

This is a very simple configuration, and yet it already provides far more sensing than the average driver's pair of eyes. The safety and reliability of a self-driving vehicle also come from redundancy: cross-checking what multiple sensors report to be true. Ranging sensors such as radar and lidar are critical in conditions that are too poor for cameras alone: both work in darkness, and radar in particular holds up well in fog and snow. A camera is a computer's practical equivalent of the human eye, so the examples in this article focus on what artificial intelligence models can do with cameras alone.
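
To make the redundancy idea concrete, here is a minimal sketch, assuming each sensor reports an independent distance to the same obstacle with roughly Gaussian noise. It combines the readings with inverse-variance weighting (a standard estimation technique, not necessarily what any particular vehicle uses) and flags a sensor that disagrees with the consensus. The `RangeReading` class, the function names and all noise values are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RangeReading:
    sensor: str        # e.g. "lidar", "radar", "camera"
    distance_m: float  # measured distance to the obstacle, in metres
    std_m: float       # assumed 1-sigma noise of this sensor, in metres


def fuse_ranges(readings):
    """Inverse-variance weighted average of independent range readings.

    Returns (fused_distance_m, fused_std_m); less noisy sensors get more weight.
    """
    valid = [r for r in readings if r.std_m > 0 and math.isfinite(r.distance_m)]
    if not valid:
        raise ValueError("no valid readings to fuse")
    weights = [1.0 / r.std_m ** 2 for r in valid]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, valid)) / total
    return fused, math.sqrt(1.0 / total)


def consistent(readings, fused, n_sigma=3.0):
    """Map each sensor to True if its reading lies within n_sigma of its own noise from the fused value."""
    return {r.sensor: abs(r.distance_m - fused) <= n_sigma * r.std_m for r in readings}


# Hypothetical readings of the same obstacle from three sensors.
readings = [
    RangeReading("lidar", 20.1, 0.05),
    RangeReading("radar", 20.4, 0.5),
    RangeReading("camera", 21.5, 2.0),
]
distance, std = fuse_ranges(readings)
print(f"fused: {distance:.2f} m +/- {std:.2f} m")
print(consistent(readings, distance))
```

Because the lidar is assumed to be the least noisy, the fused distance lands very close to its reading, about 20.10 m. If the camera instead reported 40 m, `consistent` would flag it as an outlier rather than letting it silently drag the estimate.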

Perceptive Perception

Below are several examples of networks that process images: 2D representations of light from a 3D world, projected onto a plane. The task is often subcategorized depending on what you are trying to do with the image, so we'll cover it in subsections.
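
As a rough illustration of how a 3D scene becomes a 2D image, the sketch below uses an ideal pinhole camera model (no lens distortion) to project 3D points onto pixels. The focal lengths and image centre (`fx`, `fy`, `cx`, `cy`) are hypothetical values, not those of any specific camera.

```python
import numpy as np


def project_points(points_cam, fx, fy, cx, cy):
    """Project 3D points onto the image plane with an ideal pinhole camera.

    points_cam: (N, 3) points in the camera frame, in metres
                (x to the right, y down, z forward along the optical axis).
    fx, fy:     focal lengths in pixels; cx, cy: principal point in pixels.
    Returns an (N, 2) array of pixel coordinates (u, v). Lens distortion is ignored.
    """
    pts = np.asarray(points_cam, dtype=float)
    if np.any(pts[:, 2] <= 0):
        raise ValueError("all points must be in front of the camera (z > 0)")
    u = fx * pts[:, 0] / pts[:, 2] + cx
    v = fy * pts[:, 1] / pts[:, 2] + cy
    return np.stack([u, v], axis=1)


# Hypothetical intrinsics for a 1280x720 camera.
fx = fy = 800.0
cx, cy = 640.0, 360.0

# Two points along the same viewing ray, the second twice as far away.
uv = project_points([[1.0, 0.5, 5.0], [2.0, 1.0, 10.0]], fx, fy, cx, cy)
print(uv)
```

Both points land on the same pixel, (800, 440), even though one is twice as far away as the other. Depth is lost in the projection, which is one reason perception networks and ranging sensors are needed to recover the 3D scene.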

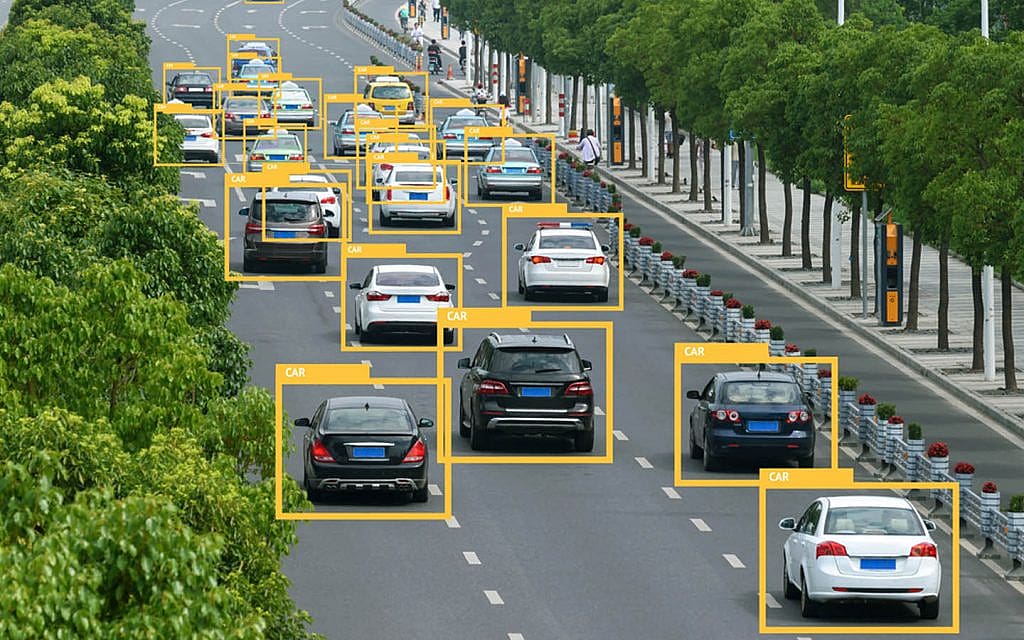

Object Detection & Tracking

The high-level goal of this kind of processing is to find out what is in the scene, where it is, and at times, where it is moving within the scene. It helps distinguish cars, cyclists and pedestrians (dynamic obstacles) from parked vehicles, buildings and signage (static obstacles). The example below uses a Feature Pyramid Network (FPN), but other methods such as R-CNN, Fast R-CNN and Faster R-CNN also exist.
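
A detector such as an FPN-based one outputs a set of boxes for each frame; tracking then links boxes across frames to tell where objects are moving. Below is a minimal sketch of one simple approach, greedy intersection-over-union (IoU) matching, assuming boxes are given as `(x1, y1, x2, y2)` pixel coordinates. The function names, the `min_iou` threshold and the example boxes are hypothetical; practical trackers typically add motion models such as Kalman filters.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2) in pixels."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily match tracks {track_id: box} from the previous frame to new detection boxes.

    Highest-IoU pairs are matched first. Returns (matches, unmatched) where matches maps
    track_id -> detection index and unmatched lists detections that could start new tracks.
    """
    pairs = sorted(
        ((iou(box, det), tid, j) for tid, box in tracks.items() for j, det in enumerate(detections)),
        reverse=True,
    )
    matches, used_dets = {}, set()
    for score, tid, j in pairs:
        if score < min_iou:
            break  # pairs are sorted, so every remaining pair overlaps too little
        if tid in matches or j in used_dets:
            continue
        matches[tid] = j
        used_dets.add(j)
    unmatched = [j for j in range(len(detections)) if j not in used_dets]
    return matches, unmatched


def displacement(prev_box, new_box):
    """Pixel motion of the box centre between frames: a crude cue for where an object is heading."""
    dx = (new_box[0] + new_box[2]) / 2 - (prev_box[0] + prev_box[2]) / 2
    dy = (new_box[1] + new_box[3]) / 2 - (prev_box[1] + prev_box[3]) / 2
    return dx, dy


# Hypothetical boxes: two tracked objects and three detections in the next frame.
tracks = {1: (100, 100, 150, 200), 2: (300, 120, 340, 180)}
detections = [(305, 120, 345, 180), (104, 100, 154, 200), (500, 50, 520, 90)]
matches, unmatched = associate(tracks, detections)
print(matches, unmatched)
print(displacement(tracks[1], detections[matches[1]]))
```

Here track 1 is matched to the second detection and track 2 to the first, the third detection is left over to start a new track, and `displacement` reports that track 1 moved 4 pixels to the right between frames.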

Flow Analysis

Another way to detect the motion of objects is to use the relative motion of information within the image to estimate where obstacles are moving. This is an example of FlowNet, used to generate optical flow information in a self-driving scene. Optical flow captures the apparent motion of each pixel, which mixes the camera's own motion with the motion of objects in the scene; when you know the motion profile of the camera itself, you can separate the two and determine where the objects in the image are going.
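
FlowNet learns optical flow with a convolutional network. To show what optical flow actually measures, here is a minimal sketch of a much older, classical technique, Lucas-Kanade, which estimates a single flow vector for an image window by least squares, assuming brightness constancy and small motion (about a pixel or less). It is not FlowNet, and the synthetic Gaussian `blob` test image is hypothetical.

```python
import numpy as np


def lucas_kanade_window(img1, img2):
    """Estimate one optical-flow vector (u, v), in pixels, for a whole image window.

    Solves the brightness-constancy equation Ix*u + Iy*v + It = 0 in the least-squares
    sense (Lucas-Kanade). Assumes the motion between frames is small (about a pixel or less).
    """
    i1 = np.asarray(img1, dtype=float)
    i2 = np.asarray(img2, dtype=float)
    # Spatial gradients of the average frame; np.gradient returns d/d(row) = Iy first, then Ix.
    iy, ix = np.gradient(0.5 * (i1 + i2))
    it = i2 - i1  # temporal gradient
    a = np.array([[np.sum(ix * ix), np.sum(ix * iy)],
                  [np.sum(ix * iy), np.sum(iy * iy)]])
    b = -np.array([np.sum(ix * it), np.sum(iy * it)])
    u, v = np.linalg.solve(a, b)
    return float(u), float(v)


def blob(shape, cx, cy, sigma=4.0):
    """Synthetic test image: a Gaussian blob centred at (cx, cy)."""
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]]
    return np.exp(-((xs - cx) ** 2 + (ys - cy) ** 2) / (2 * sigma ** 2))


# Move the blob 0.5 px right and 0.25 px up (image y points down) between two frames.
frame1 = blob((64, 64), 32.0, 32.0)
frame2 = blob((64, 64), 32.5, 31.75)
u, v = lucas_kanade_window(frame1, frame2)
print(f"estimated flow: u={u:.3f}, v={v:.3f}")
```

The estimate comes out close to the true shift of (0.5, -0.25) pixels. If you also know how the camera moved, you can predict the flow its own motion would produce in that window and subtract it; what remains is, roughly, the motion of the object itself.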

Driving like a driver

While the two networks above provided information about what was in the scene and where, this one is unique. PilotNet learns to directly map the information in an image to the steering actions a human driver took in the same situation. Check it out in the video below. While this is great for rapid learning, how do you think one would address driver bias? After all, does a self-driving vehicle have to drive like a human? Food for thought.
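
PilotNet itself is a convolutional network trained on real camera frames. The sketch below strips the idea down to its core supervised objective, fitting a model so that its steering output matches what the human did, using a linear model with ridge-regularized least squares on synthetic data. Everything here (function names, the 16-number "frames", the ridge value) is a hypothetical stand-in, not PilotNet.

```python
import numpy as np


def fit_steering_policy(frames, steering, ridge=1e-3):
    """Behaviour cloning in its simplest form: find weights w so that [frames, 1] @ w
    approximates the steering the human driver applied (ridge-regularized least squares).

    frames:   (N, D) array, one row of image features per recorded frame
    steering: (N,) array of the human driver's steering command for each frame
    """
    x = np.hstack([frames, np.ones((frames.shape[0], 1))])  # append a bias column
    return np.linalg.solve(x.T @ x + ridge * np.eye(x.shape[1]), x.T @ steering)


def predict_steering(w, frames):
    """Apply the fitted linear policy to new frames."""
    x = np.hstack([frames, np.ones((frames.shape[0], 1))])
    return x @ w


# Synthetic stand-in for a driving log: 500 "frames" of 16 features each, and a
# "human" whose steering is a fixed linear function of those features plus noise.
rng = np.random.default_rng(0)
frames = rng.normal(size=(500, 16))
human_w = rng.normal(size=16)
steering = frames @ human_w + 0.01 * rng.normal(size=500)

w = fit_steering_policy(frames, steering)
mse = np.mean((predict_steering(w, frames) - steering) ** 2)
print(f"training MSE: {mse:.2e}")
```

Because the only target is the recorded human steering, the fitted model reproduces whatever habits that driver had, good or bad. That is where the driver-bias question comes from: nothing in this objective asks the model to drive better than the person it learned from.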

Lane Analysis

Alternative approaches may first find where the lanes are in an image and then try to keep the vehicle centered in its lane without any human input. LaneNet does the first half of that work by reporting the lane markings found in the scene. Couple it with other networks and algorithms for steering (such as the Stanley lateral controller), and you've got a basic form of autonomous lane keeping.
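
The Stanley controller steers to correct both the heading error and the cross-track error (the lateral distance from the path). Below is a minimal sketch of its commonly cited form, δ = ψ_e + arctan(k·e / (k_s + v)), together with a hypothetical helper that turns lane-marking positions into a cross-track error. It assumes the lane markings have already been converted from pixels into metres in the vehicle frame (which requires camera calibration, not shown); the gains, the 30° steering limit and the sign convention are all assumptions.

```python
import math


def cross_track_from_lanes(left_offset_m, right_offset_m):
    """Hypothetical helper: cross-track error from the two lane markings.

    Offsets are lateral positions of the left and right markings in the vehicle frame,
    in metres, positive to the left. A positive result means the lane centre lies to the
    vehicle's left, i.e. the vehicle sits to the right of centre.
    """
    return 0.5 * (left_offset_m + right_offset_m)


def stanley_steering(heading_error, cross_track_error, speed, k=1.0, k_soft=1.0,
                     max_steer=math.radians(30.0)):
    """Stanley lateral control: delta = heading_error + atan(k * e / (k_soft + v)).

    Assumed sign convention: positive steering turns left; heading_error is the path
    heading minus the vehicle heading (rad); cross_track_error is positive when the
    vehicle is right of the path (m); speed is in m/s. k_soft keeps the arctangent term
    well behaved near zero speed. The result is clipped to +/- max_steer.
    """
    delta = heading_error + math.atan2(k * cross_track_error, k_soft + speed)
    return max(-max_steer, min(max_steer, delta))


e = cross_track_from_lanes(2.1, -1.5)  # lane centre 0.3 m to the vehicle's left
print(math.degrees(stanley_steering(0.0, e, speed=10.0)))  # gentle left correction
print(math.degrees(stanley_steering(0.0, 5.0, speed=0.0)))  # large error at standstill: clipped
```

The speed term in the denominator is the key design choice: at highway speed a 0.3 m offset produces only a gentle correction, while large errors at low speed produce aggressive commands that the clip keeps within the steering limit.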

What to bother about

This last example categorizes the image information into buckets that make sense at a practical level. While a driver's typical view (and a camera's, for that matter) includes birds, trees and sky, these may not be relevant to immediate driving decisions. This task is called semantic image segmentation, and SegNet does it pretty well.
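
A segmentation network such as SegNet outputs a score for every class at every pixel. The sketch below shows only the last, simple step: picking the highest-scoring class per pixel and keeping the classes that matter for driving. The class list, the set of "relevant" classes and the tiny score array are hypothetical.

```python
import numpy as np

# Hypothetical label set; real datasets define their own classes.
CLASSES = ["sky", "vegetation", "building", "road", "car", "pedestrian"]
DRIVING_RELEVANT = {"road", "car", "pedestrian"}


def segment(scores):
    """Turn per-pixel class scores of shape (C, H, W) into a label map of shape (H, W)."""
    return np.argmax(scores, axis=0)


def relevance_mask(labels, classes=CLASSES, keep=DRIVING_RELEVANT):
    """Boolean (H, W) mask: True where a pixel's class matters for driving decisions."""
    keep_ids = [i for i, name in enumerate(classes) if name in keep]
    return np.isin(labels, keep_ids)


# Toy 2x3 "image": top row scored as sky, bottom row as road with a car on the right.
scores = np.zeros((len(CLASSES), 2, 3))
scores[CLASSES.index("sky"), 0, :] = 1.0
scores[CLASSES.index("road"), 1, :] = 1.0
scores[CLASSES.index("car"), 1, 2] = 2.0

labels = segment(scores)
mask = relevance_mask(labels)
print([[CLASSES[i] for i in row] for row in labels])
print(mask)
```

In this toy example the top row is labelled sky and masked out, while the bottom row (road, plus a car on the right) is kept for downstream driving decisions.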

Where to from here?

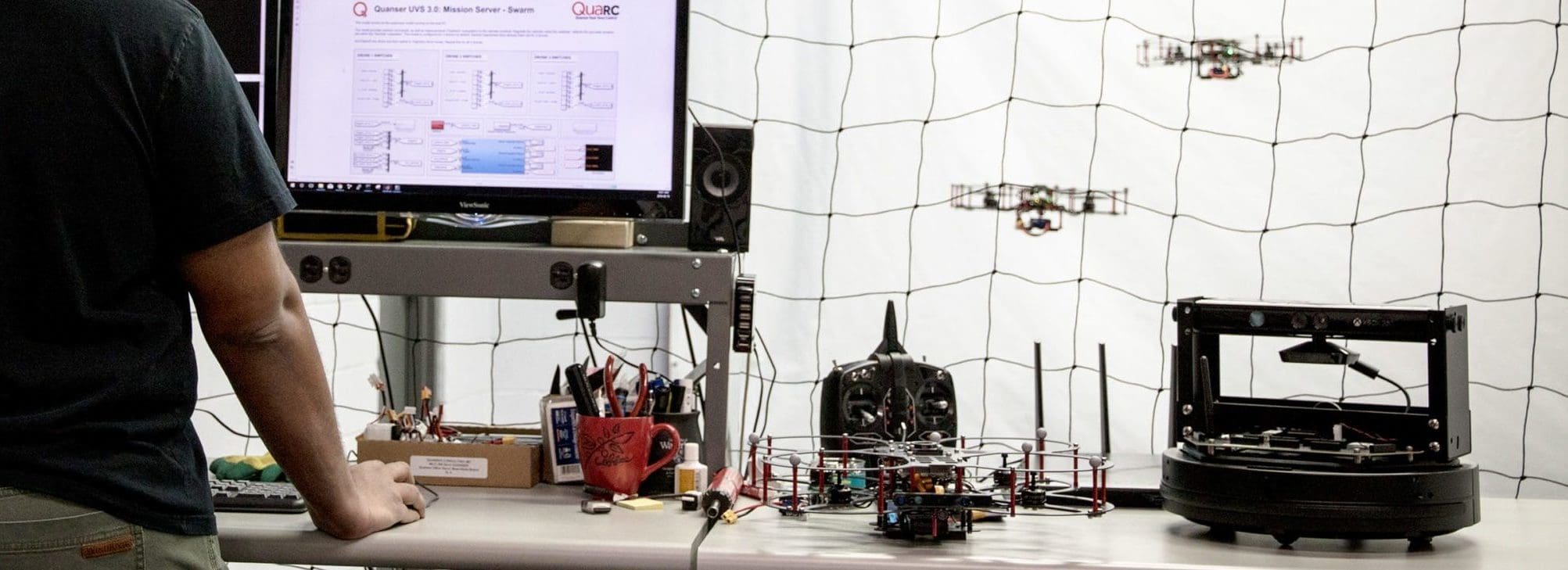

Some level 4 autonomous vehicles today may already be using models and networks such as these. There is a lot more coming in terms of self-driving technology and artificial intelligence. I definitely think there is a place for NLP models in self-driving as well, although I would personally prefer that no software sit between me and my hardware. If this blog has clarified some of the technologies revolving around artificial intelligence for self-driving, be sure to follow us for updates to our Autonomous Systems and Applied AI offerings over the next few months. We have a ton of examples about to be released for mobile robotics and self-driving, along with simple, intuitive APIs to introduce these concepts to your students and research lab.

Talk soon, Murtaza.

ABOUT THE AUTHOR

Murtaza Bohra is the R&D Manager for the Academic Applications team at Quanser, which develops teaching content and research examples to accompany our systems. Over 8 years, he has been involved in developing flight controllers for the QDrone research quadcopter, autonomous applications for Quanser’s self-driving vehicle QCar, as well as undergraduate teaching content for the QArm manipulator. He has also represented Quanser globally in workshops, tradeshows, plenary talks, and conferences, has presented numerous Quanser webinars and authored a variety of blogs. Murtaza holds a Master of Applied Science in Space Mechatronics and a Bachelor of Applied Science in Engineering Science from the University of Toronto, where he has taught a graduate course on manipulator robotics as well.