Welcome back to the second post in this blog series, covering the development of our Quanser Self-Driving Car Research Studio. My first post looked at the hardware aspects of QCar, the feature vehicle of the studio. Today, I would like to focus on software aspects.

Admittedly, the software side isn’t quite as flashy as talking about the hardware, but stick with me, because the software is at the core of making all the magic happen. (Don’t tell our software guys I said that though, or it might go right to their heads!) So let’s look at some exciting new software additions – and maybe a few things you didn’t know that our software can already do.

QUARC: Bigger on the Inside

At the heart of all our products is our software, called QUARC. Many of our products are focused on teaching or researching various aspects of control theory, so traditionally, we present this using MathWorks MATLAB/Simulink. Simulink allows you to graphically draw control diagrams that are exactly the same as what you might see in a textbook. QUARC extends the Simulink library with new, real-time functionality: there are over 400 blocks available in QUARC.

QUARC uses Simulink’s code generation capabilities in concert with our custom cross-compilers to automatically build and deploy code to remote targets (the NVIDIA TX2 in the case of the QCar). Once deployed, QUARC enables Simulink to provide you with real-time data, as well as real-time parameter changes to your vehicle. Finally, and probably most importantly, QUARC provides a level of hardware abstraction that promotes code reusability, but I’ll come back to that in a bit.

Autonomous vehicles span many different aspects of engineering and computer science, and not everyone lives in the MATLAB/Simulink world. Simulink support is just one piece of QUARC, though. Did you know that QUARC also provides support in Java, C, C++, C#, and VB? It is a very powerful collection of tools and application programming interfaces (APIs). The QUARC codebase has over 3 million lines of code, and that doesn’t even include all of our custom cross-compilers! That is more code than the space shuttle, the F-22 Raptor, or the Hubble Space Telescope have!

The “New” Hot Programming Language

If you’re already working in the autonomous vehicle space, then Python is an obvious omission from the list of languages QUARC supports. Despite being almost 30 years old, Python has seen a massive surge in popularity in the last five years, and it now dominates artificial intelligence and machine learning in particular. That’s why, as a key part of the Self-Driving Car Research Studio project, we are adding support for Python 3 to QUARC.

But wait! you cry. What about Python 2.7!? Well, with that version scheduled to be “sun-setting” on January 1st, 2020, many of the latest APIs only provide Python 3 support. So we opted to move directly to that version.

Python on the Radar

QUARC is compartmentalized into many sub-libraries, but there are three in particular that we chose to wrap for Python support: HIL, Stream, and Media:

- HIL (Hardware In the Loop)

The HIL API is one of the foundational APIs of QUARC. Remember the hardware abstraction feature I mentioned being a key feature of QUARC? With our HIL API, you begin by calling our HIL Open function and specify what piece of hardware you’re talking to. After that, we have function calls to read analog inputs, write PWM outputs, set encoder counts, etc. So, let’s say you previously wrote an algorithm for your QBot 2e ground robot that uses sensor fusion to combine IMU readings with wheel encoders to estimate your position. Now you want to move that algorithm to your brand-new QCar. All you need to do is change the parameter in your HIL Open that says you are now accessing a QCar instead of a QBot, and the rest of your code stays the same. QUARC provides you with a unified interface for every target, every platform, and every language.
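To make the hardware abstraction idea concrete, here is a minimal, self-contained sketch in Python. It does not use the QUARC HIL API: `FakeBoard`, `open_board`, the card type strings, and the encoder numbers are all hypothetical stand-ins invented for illustration. The point is only that the algorithm (`distance_travelled`) never changes; the one device-specific piece is the string passed to the "open" call.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class FakeBoard:
    """Hypothetical stand-in for an opened HIL board (not the QUARC API)."""
    card_type: str
    encoder_counts: Dict[int, int]

    def read_encoder(self, channels: List[int]) -> List[int]:
        # Return the current count for each requested encoder channel.
        return [self.encoder_counts[ch] for ch in channels]


def open_board(card_type: str) -> FakeBoard:
    """Hypothetical 'open' call; the card type is the only device-specific input."""
    # Made-up encoder readings so the sketch runs without any hardware.
    simulated = {
        "qbot2e": {0: 1200, 1: 1180},
        "qcar": {0: 5000},
    }
    return FakeBoard(card_type, simulated[card_type])


def distance_travelled(board: FakeBoard, channel: int, counts_per_metre: float) -> float:
    """Device-independent algorithm: convert encoder counts to metres."""
    (counts,) = board.read_encoder([channel])
    return counts / counts_per_metre


# Same algorithm, two different targets: only the open call changes.
qbot_distance = distance_travelled(open_board("qbot2e"), 0, 2400.0)
qcar_distance = distance_travelled(open_board("qcar"), 0, 10000.0)
```

With a real board, the counts-per-metre scaling would come from the wheel and encoder specifications rather than the made-up values used here.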

Python will be a part of our beta release of QUARC 2020, with initial support for the QCar’s Ubuntu on the TX2, QBot 2e’s Raspbian on the Raspberry Pi, and Windows. That also means you’ll have access to all of our current data acquisition hardware that is supported on those platforms using Python.

- Stream

The Stream API is all about communications. If you want to customize your hardware to be specific to your research, you may need access to serial ports, SPI, or I2C. Like the HIL API, the Stream API unifies communications with a protocol-agnostic interface. This means that the TX2 SPI port can be accessed the same way as the high-performance SPI port we provide through the QCar’s custom hardware. Going cross-platform, the same code can then be used to access an SPI port on a QBot. With a few parameter changes, the same functions could talk to an I2C port. If you build a library of code to access custom sensors or other hardware, QUARC helps make your hardware-specific code more portable.

Outside of the hardware protocols, the Stream API also supports pipes. This feature lets you create communication channels between separate processes running on your QCar with minimal overhead. Of course, you could code everything in a single process with the same language, but this gives you the flexibility to use multiple languages if you want. For example, you could use Python for the AI algorithm, Simulink for the motion controller, and C++ for image processing.

The final core use of the Stream API is for networking with TCP/IP and UDP. In this area, there are plenty of options for Python already. Using communications for control usually means a lot of small, but time-sensitive packets (try the “nagle” option!). Using communications for video means moving a lot of data as efficiently as possible (and with eight possible video streams on the QCar, that’s gigabits of data per second!). The Stream API gives you access to lots of parameters so you can optimize each of your communication streams.
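As a point of comparison for the small, time-sensitive control packets mentioned above, here is a minimal sketch using Python's standard `socket` module rather than the Stream API. It assumes the “nagle” option refers to Nagle's algorithm, which batches small TCP writes and can add latency; in plain sockets it is disabled with `TCP_NODELAY`. The helper names are made up for this example.

```python
import socket


def make_low_latency_tcp_socket() -> socket.socket:
    """Create a TCP socket with Nagle's algorithm disabled."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    # TCP_NODELAY=1 asks the stack to send small writes immediately
    # instead of coalescing them into larger segments.
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock


def nagle_disabled(sock: socket.socket) -> bool:
    """Report whether TCP_NODELAY is currently set on the socket."""
    return sock.getsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY) != 0


control_sock = make_low_latency_tcp_socket()
control_nodelay = nagle_disabled(control_sock)
control_sock.close()
```

For bulk video traffic the trade-off usually goes the other way: leaving Nagle's algorithm enabled and tuning buffer sizes tends to matter more than per-packet latency.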

- Media

Speaking of video, with the release of the Self-Driving Car Research Studio and QCars, we will be making our Media API publicly accessible for the first time. Like our other APIs, it provides hardware abstraction and unification. You can access the depth camera on the QCar with the same code that accesses the depth camera on the QBot, even though under the hood these cameras operate very differently. You could plug a webcam into one of the QCar’s USB 3 ports and access it the same way you access one of our high-speed CSI cameras. Our software team optimized the Media API for each of our target platforms. In the case of the QCar, the 2D media functions use DMA to move the CSI camera data directly to the GPU, which handles format conversions. With that, we can efficiently translate the raw camera data into other formats that are directly compatible with the language of your choice, so you can get high-speed or high-resolution video from the 360-degree camera coverage.

The Robot Elephant in the Room

It’s also time to address the robot elephant in the room: ROS, or Robot Operating System. While it has been around since the late 2000s, its explosive growth began in 2016 with the first alpha version of ROS 2 and interest from both Amazon and Microsoft in 2017. Although there are lots of options for robotic middleware, ROS has become a dominant player, with a dedicated branch of the research community developing their work around the ROS framework.

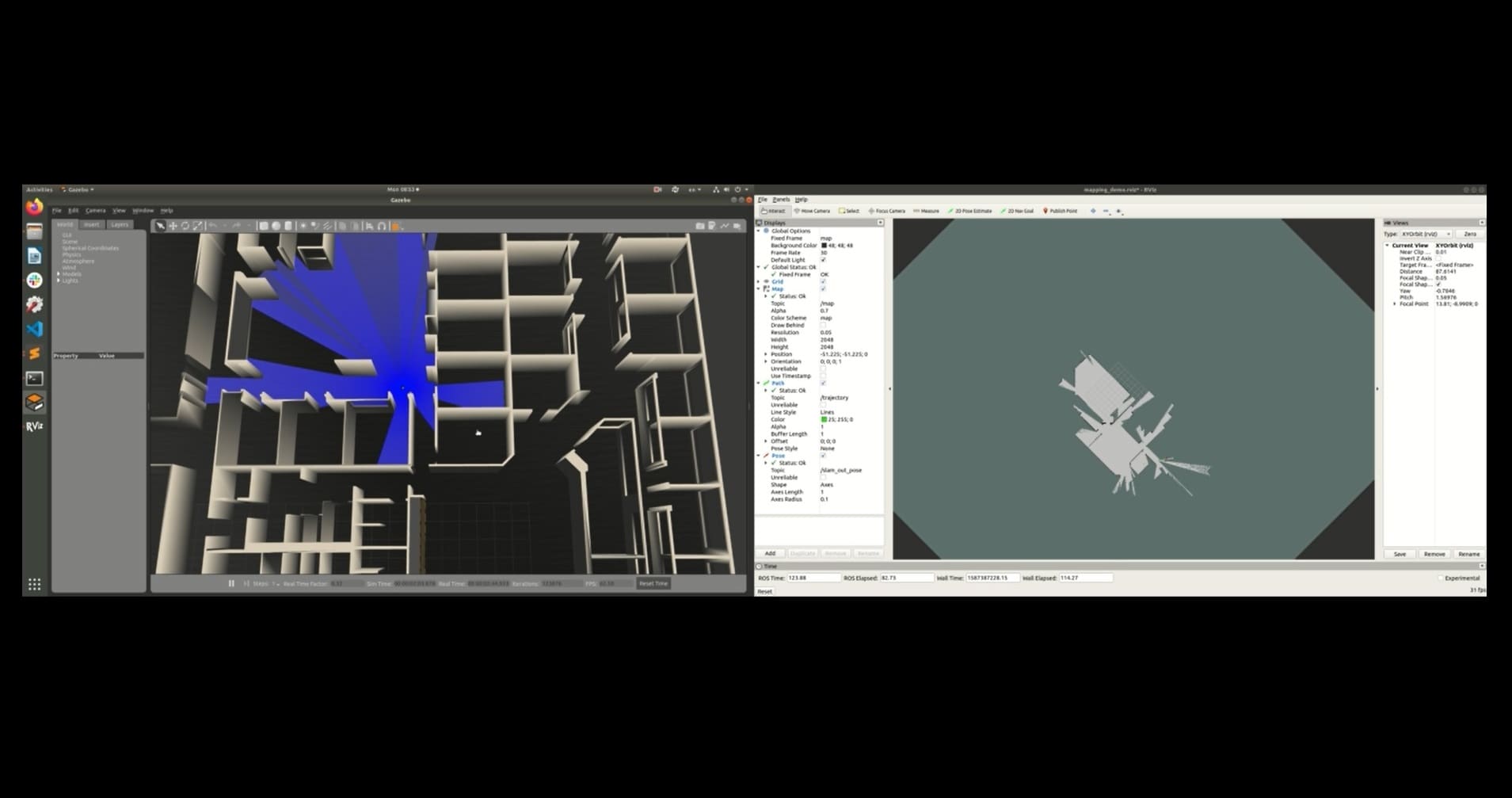

ROS isn’t really an operating system, but rather a collection of tools and APIs to interface with hardware at a low level and then communicate between heterogeneous devices. So what are we doing in ROS? We’re not quite ready to talk about the details yet, other than to say it’s coming for QCar in early 2020! With the addition of our new Python and C APIs to QUARC, you will have all the parts you need to pursue your own development. I promise to bring you more details soon.

More Simulation

A new feature released in QUARC 2019 SP1 back in October is the HIL Simulation. This simple yet powerful block lets you simulate any of our DAQs or devices. In the HIL Initialize, you would change the board identifier from ‘0’ to ‘0@tcpip://localhost:18000’, for example. Your controller would now be talking to a virtual device of your own design instead. When you write an analog out on a HIL Write, it will arrive at the HIL Simulation as an analog in. A digital out from the simulation block would arrive as a digital input on your HIL Read block and so forth. This is a great way to seamlessly switch between physical and virtual hardware.
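The board identifier in that example reads as a board number, an `@`, and then a URI pointing at the simulated device. The sketch below splits such a string into its parts using only Python's standard library. The `board@uri` layout is inferred from the single example above, and `BoardTarget` and `parse_board_identifier` are hypothetical names for illustration; this is not how QUARC itself parses identifiers.

```python
from typing import NamedTuple, Optional
from urllib.parse import urlsplit


class BoardTarget(NamedTuple):
    board: str               # board number, e.g. "0"
    scheme: Optional[str]    # URI scheme, e.g. "tcpip"; None for physical hardware
    host: Optional[str]
    port: Optional[int]


def parse_board_identifier(identifier: str) -> BoardTarget:
    """Split 'board@scheme://host:port' into parts (layout assumed from the example)."""
    board, separator, uri = identifier.partition("@")
    if not separator:
        # A plain identifier such as "0" refers to the physical board.
        return BoardTarget(board, None, None, None)
    parts = urlsplit(uri)
    return BoardTarget(board, parts.scheme, parts.hostname, parts.port)


physical = parse_board_identifier("0")
virtual = parse_board_identifier("0@tcpip://localhost:18000")
```

Here `physical` carries only the board number, while `virtual` also carries the scheme, host, and port of the simulated device.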

A simulation doesn’t need to be a distinct switch between virtual and physical, though. In fact, simulation and hardware-in-the-loop are more of a spectrum. In the demo we showed earlier this year at ICRA, the QCar communicated with a remote simulation of the vehicle dynamics running on a PC with a virtual world created in Unreal Engine 4. Data from the virtual RGB and depth cameras was transmitted to the QCar, so all the image processing was done onboard the TX2. This controlled the physical drive motor and steering. The steering and measured wheel velocity were then returned to the PC to control the virtual QCar, completing the loop.

Going to the other extreme, the QCar could be driving around the physical world, but you could use augmented reality to inject virtual objects into the real data. We’ll show you more details of our augmented reality experiments and how this can be used with QCar in the coming months.

Coming Up…

At this point, our Self-Driving Car Research Studio is almost finalized. We still have another month or so of validating all the hardware, then we’ll start moving this over to our manufacturing team. Our software development team has done some amazing work to get us up and running, and they’re still adding more features. Our applications team has been busy implementing image processing algorithms, testing AI, and creating different driving scenarios. Their goal is to give you a great starting point so you can implement, validate, and publish faster! In the next blog post, we’ll take a look at what they have been up to. Ultimately, our driving motivation (pun intended) is to give you the tools to do your research your way.
