Back Then…

In the late 1990s, there were no iPhones, no YouTube, and no Arduinos or Raspberry Pis. The dot-com bubble was still growing, and Google was still a new thing. You had to pay hundreds of dollars for a C compiler, and 90% of internet users were still on dial-up. Nevertheless, as an enthusiastic fourth-year engineering student working on my capstone project, I felt that anything was possible.

Together with three other team members, I was determined to demonstrate the possibilities of an automated highway system using 1/10 scale model cars. We were using cutting-edge technology, but our project goals were quite different from what we think of as an autonomous car today, whether full scale or model.

The car followed a line using an array of six photodetectors connected to a microcontroller which ran a fuzzy logic engine we hand-coded in assembly. Speed limit “signs” were read with a simple implementation of a barcode scanner. Our high-level planning processor managed inter-car communications using an infrared serial connection (WiFi was too expensive) and it used a decision tree to coordinate vehicle actions. Every PCB had to be built from scratch, and every cable had to be made by hand. That was eight months of work.

And now…

Fast forward to 2019. I am now employed at Quanser. Early this year, we started talking about developing a new platform for researchers to study self-driving cars. As a first proof of concept, I dug out my vehicles from 19 years prior, ripped out all the electronics, and replaced them with a webcam, Raspberry Pi, and our QUARC Real-Time Control Software. We recreated 8 months of work in 2 days.

The idea of automated highway systems is more relevant now than ever. Highway 401 in Ontario is one of the busiest highways in the world, with more than half a million vehicles travelling on it every day, far exceeding its design capacity. If we can take humans out of the loop, even just on the highways, we could increase traffic density and speed while reducing fuel consumption.

A video by Jonathan Sprinkle at the University of Arizona demonstrates that even a small number of autonomous vehicles mixed in with regular drivers can help to increase average travel speeds while reducing fuel consumption.

Helping Researchers Develop the Perfect Autonomous Car

So how do you make a perfect autonomous car? We don’t know, but we do have the expertise to provide researchers with a powerful and flexible platform for them to test their ideas. On the side, we’re going to test some of our own ideas too! Over the last six months, we have been hard at work developing our QCar platform.

If you are interested in this subject, stay tuned for my blog post series. Over the next few months, I plan to post regular updates about our progress and show you where we’re heading. Today, I’m going to start by focusing on the hardware aspects of our QCar.

The QCar Hardware

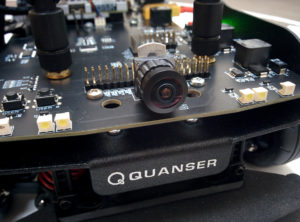

After many months of prototyping, we are happy to announce that we have just built our first alpha version! An alpha version means that all the fundamental design goals are now functional and we have solved all the technical challenges to turn this into a product. We still have a couple more iterations before it is ready for manufacturing and we lock down the design, but in the meantime we’re well into our software and applications development.

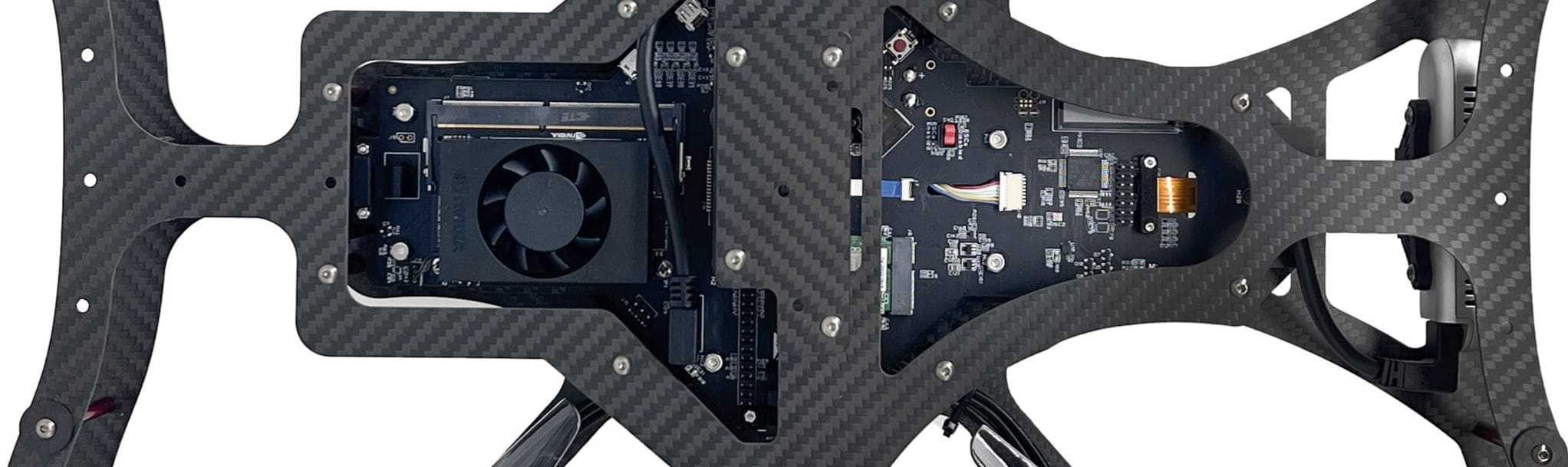

Serious GPU Power for Real-time Image Processing

At the core of the QCar, an NVIDIA Jetson TX2 plugs into a custom PCB. For a small, mobile platform, this is some serious GPU power, which supports real-time image processing and AI functions. As part of the supporting architecture, we have implemented a USB 3.0 hub so that researchers can add a variety of high-speed devices. Included with the QCar will be an Intel RealSense D435 depth camera, which will make use of the USB 3.0 hub.

Vision and Navigation

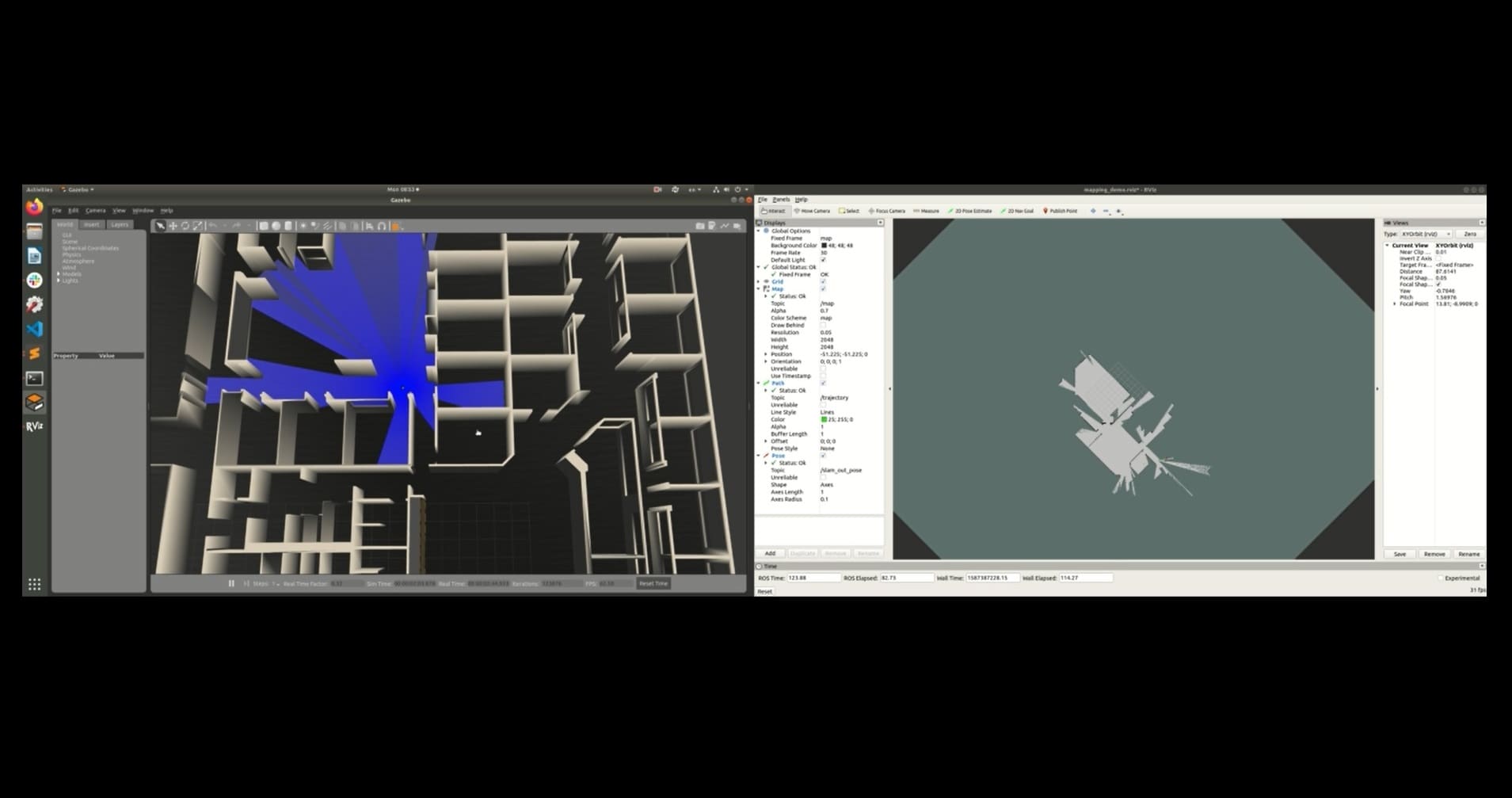

Another key aspect of the supporting architecture is a high-speed CSI interface. The QCar will include four onboard wide-angle CSI color cameras to give you 360 degrees of vision. These cameras can stream their data directly to the onboard GPU, so you can get almost 4K resolution at 10 bits per pixel from each camera. Alternatively, you can switch to lower resolutions and get 120 fps! With the direct interface into the GPU, you can leverage the power of the CUDA cores with minimal latency.

We’ve also added a 2D LIDAR on top for 360-degree ranging. You can use it to enhance your visual processing with the CSI cameras, or you can navigate with LIDAR alone. We’ve already taken care of some of the basic LIDAR processing functions, so you can get smart-interpolated data in fixed or variable length arrays, or you can just get the raw data if you prefer to start there and build up.
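To illustrate what interpolating a scan into a fixed-length array can look like, here is a minimal sketch in Python. It is not the QCar’s actual LIDAR processing: the function name `resample_scan`, the degree and metre units, and the convention that a non-positive or non-finite range means "no return" are all assumptions made for the example.

```python
import math
from bisect import bisect_right


def resample_scan(angles_deg, ranges_m, num_bins=360):
    """Linearly interpolate an irregular 2D scan onto num_bins evenly spaced angles.

    Hypothetical helper for illustration; returns a list of ranges for the
    angles 0, 360/num_bins, 2*360/num_bins, ... degrees.
    """
    # Keep valid returns only (assumption: range <= 0, NaN or inf means "no return").
    points = sorted(
        (a % 360.0, r)
        for a, r in zip(angles_deg, ranges_m)
        if math.isfinite(r) and r > 0.0
    )
    if not points:
        return [float("nan")] * num_bins

    # Pad with wrapped copies of the last and first points so the
    # interpolation is continuous across the 0/360 degree seam.
    first_a, first_r = points[0]
    last_a, last_r = points[-1]
    padded = [(last_a - 360.0, last_r)] + points + [(first_a + 360.0, first_r)]
    xs = [a for a, _ in padded]

    out = []
    for i in range(num_bins):
        target = i * 360.0 / num_bins
        hi = bisect_right(xs, target)  # first sample strictly past the target angle
        (a0, r0), (a1, r1) = padded[hi - 1], padded[hi]
        t = (target - a0) / (a1 - a0)
        out.append(r0 + t * (r1 - r0))
    return out
```

Given four readings at 0, 90, 180 and 270 degrees and eight bins, this fills in the 45-degree gaps, including the one that spans the 0/360 seam. A variable-length output would simply skip the resampling step and return the filtered points.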

Exploring the Use of Audio

One of our more experimental features at the moment is a pair of stereo microphones. This adds another dimension to sensor fusion. Could audio be used to determine the surface material your car is currently travelling on and change the control response as a result? Could a response to the audio cues of an emergency vehicle be formulated before it is visible? Could honking horns be spatially located to identify a future threat? To build on this area of exploration, our developers have also added a speaker to the vehicle, which expands the ways it can respond to an impending threat.

Signals and Lights

On top of brake lights, turn signals, and reverse indicators, the QCar also has headlights. Driving at night adds another facet to processing the driving environment. With only headlights, the field of view becomes more restricted, image data becomes noisier, color information is more limited, and oncoming traffic and street lights can create new challenges. With the QCar, you can run your image processing routines under different lighting conditions to test the robustness of your algorithms, or try dynamically switching your processing models.

Before our society reaches a fully autonomous transportation infrastructure, self-driving cars will need to interact with those unpredictable human drivers. Since the processor can directly control all the lights, it takes only a small snippet of code to tie the signals to a game controller. We envision test scenarios where fully autonomous QCars interact with one or more human-driven QCars. By using cameras to observe the brake and turn signals of the human-driven vehicles, AI routines on the autonomous cars can try to infer the intentions of the human drivers.
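As a rough idea of how small the controller-to-lights code can be, the sketch below maps a game controller’s state to a set of light commands. Every name in it (`LightState`, `lights_from_controller`, the trigger and bumper inputs, the one-second blink period) is hypothetical and is not part of the QCar software; a real version would read the controller and drive the lights through whatever interface the platform provides.

```python
from dataclasses import dataclass


@dataclass
class LightState:
    """Desired on/off state of each light (hypothetical structure)."""
    brake: bool
    reverse: bool
    left_signal: bool
    right_signal: bool
    headlights: bool


def lights_from_controller(throttle, brake_trigger, left_bumper, right_bumper,
                           headlights_on, time_s, blink_period_s=1.0):
    """Map game controller inputs to light commands.

    throttle:      -1.0 (full reverse) to 1.0 (full forward)
    brake_trigger:  0.0 (released) to 1.0 (fully pressed)
    time_s:         current time in seconds, used to flash the turn signals
    """
    # Turn signals flash: on for the first half of each blink period.
    blink_on = (time_s % blink_period_s) < blink_period_s / 2
    return LightState(
        brake=brake_trigger > 0.05,  # small dead zone against trigger noise
        reverse=throttle < 0.0,
        left_signal=left_bumper and blink_on,
        right_signal=right_bumper and blink_on,
        headlights=headlights_on,
    )
```

Keeping the mapping as a pure function of the controller state makes it easy to test on a desk before any hardware is involved.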

And There’s More

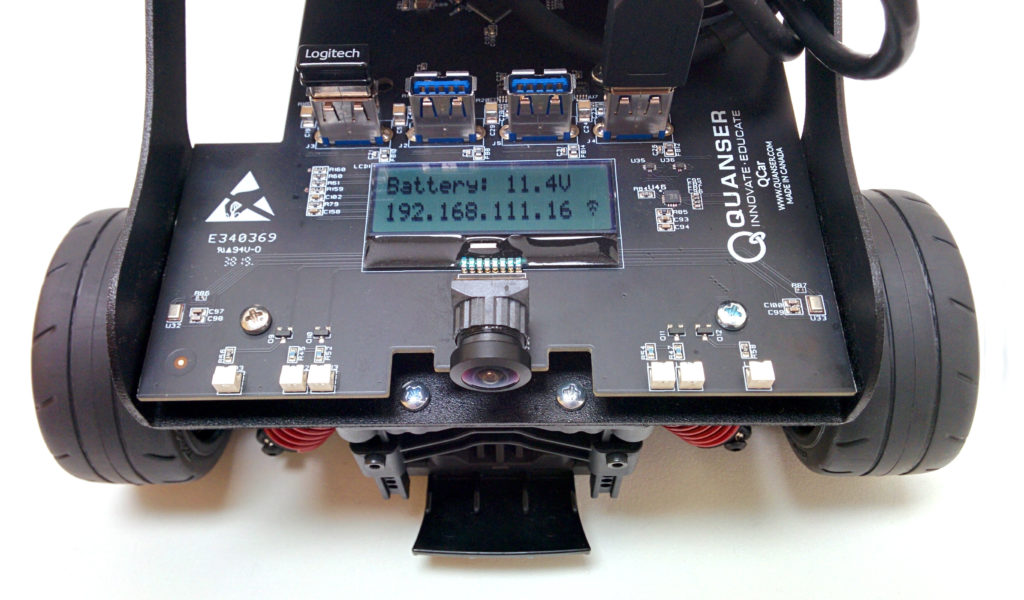

To round things out, we also have an encoder on the motor and current measurements, so you can estimate power consumption and quantify the efficiency of driving. The 9-axis inertial measurement unit can work in combination with optical flow and encoder measurements for dead reckoning in the absence of external tracking systems. The LCD screen shows the current IP address and battery levels, and you can even write your own messages to it.
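For readers new to dead reckoning, here is a minimal sketch of the idea in Python: the encoder supplies the distance travelled in each time step, the gyro supplies the yaw rate, and the two are integrated into a position estimate. This is a textbook planar model, not Quanser’s implementation; the function names, the `counts_per_metre` calibration constant, and the flat-ground, no-slip assumptions are all mine.

```python
import math


def encoder_distance(delta_counts, counts_per_metre):
    """Convert a change in encoder counts to metres (calibration value is assumed)."""
    return delta_counts / counts_per_metre


def dead_reckon(pose, distance_m, yaw_rate_rad_s, dt_s):
    """Advance an (x, y, heading) pose by one time step.

    distance_m:     distance travelled this step, e.g. from encoder_distance()
    yaw_rate_rad_s: yaw rate from the IMU gyro
    dt_s:           duration of the step in seconds
    """
    x, y, heading = pose
    dtheta = yaw_rate_rad_s * dt_s
    # Use the heading at the middle of the step, which follows a curved
    # path more closely than using the heading at the start of the step.
    mid_heading = heading + 0.5 * dtheta
    x += distance_m * math.cos(mid_heading)
    y += distance_m * math.sin(mid_heading)
    # Wrap the new heading into (-pi, pi].
    new_heading = heading + dtheta
    new_heading = math.atan2(math.sin(new_heading), math.cos(new_heading))
    return (x, y, new_heading)
```

Stepping this through a quarter circle of radius 1 m in small increments should bring the estimate close to (1, 1) with a heading of 90 degrees. On a real vehicle, wheel slip and gyro bias make the estimate drift over time, which is one reason to combine it with other measurements such as optical flow.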

Not Enough? Customize!

Although we’ve tried to think up a lot of use cases, we know you will want to do more and customize the vehicles for your specific research. You can plug a keyboard, mouse, and HDMI cable directly into the vehicle so you have direct access to the Ubuntu operating system. We provide user-accessible communication ports for SPI, I2C, CAN bus, serial, Ethernet, and USB 3 so you can add more sensors and cameras. There are also general purpose digital IO, four user encoder channels with full quadrature decoding, and special PWM outputs that support general purpose PWM, standard servo PWM, DShot, OneShot, and MultiShot. You could instrument each wheel on the vehicle if you like, or build a robot arm on top! Let us know in the comments below what you would like to do with a scale model of an autonomous research car!

Next time, I’ll tell you about the exciting software advancements we’re working on to support your research! Talk to you next month.