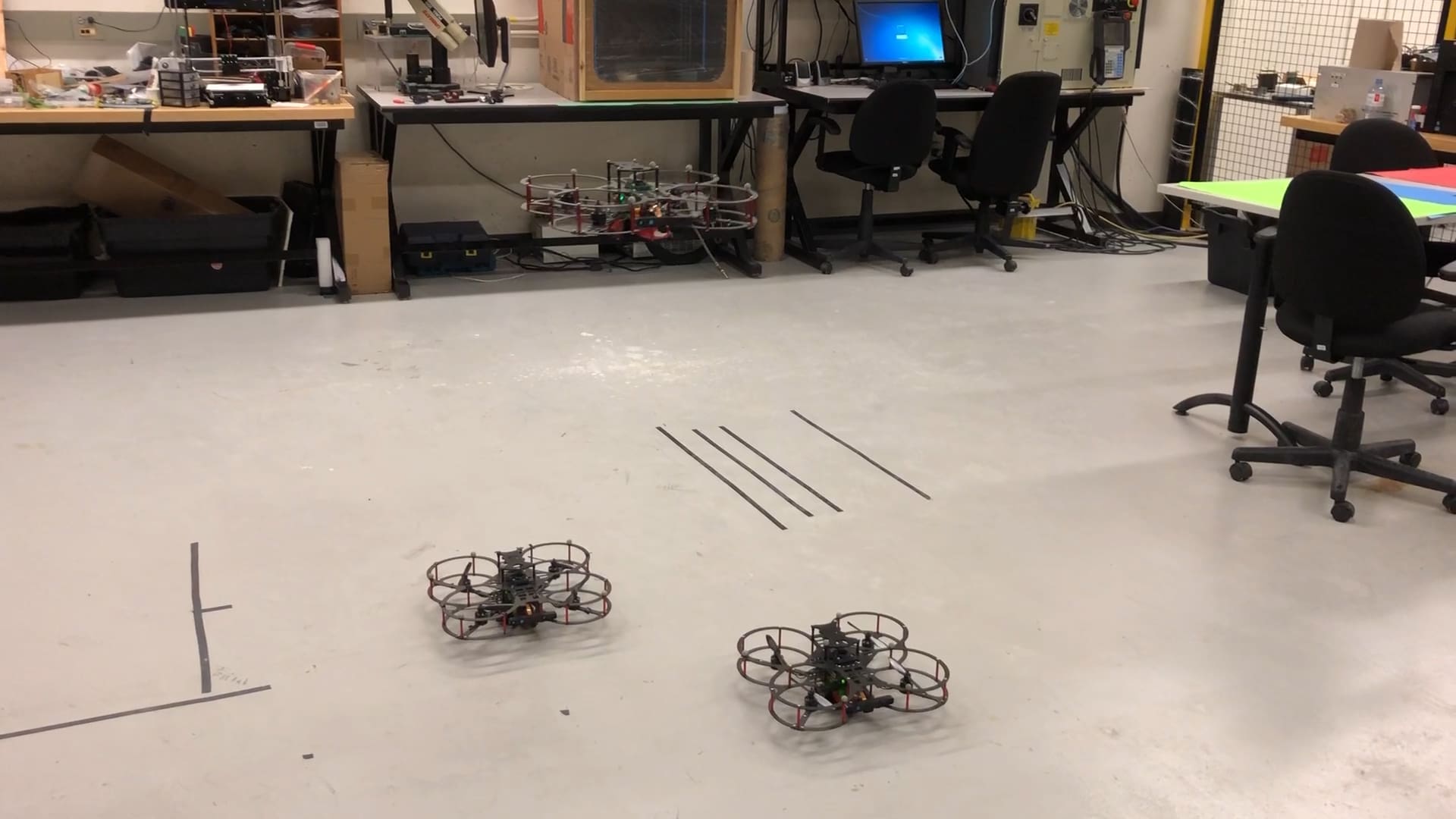

One of the best parts of my job as an R&D engineer at Quanser is meeting with our customers and learning how they use our systems in their teaching labs or for their research. Recently, I visited the Autonomous Vehicles Systems Lab (AVS) at the University of the Incarnate Word (UIW) in San Antonio, Texas. While UIW is a regional, undergraduate-focused university, the AVS Lab is one of the biggest undergraduate research labs in the U.S. With the recent addition of the Quanser QDrones to their lab, I was curious to see what they were up to.

Drones monitoring power lines

As Dr. Mike Frye, Associate Professor and Director of the AVS Lab at UIW, explained, his team is partnering with the local power company to help monitor transmission lines. They want to deploy drones that fly to the transmission towers, take pictures or videos, and send them to the operations center for analysis. The team already had experience with the QBall quadrotors in their lab, so the QDrone, a new generation of Quanser UAVs, fit their needs perfectly. Here is a demonstration of their application:

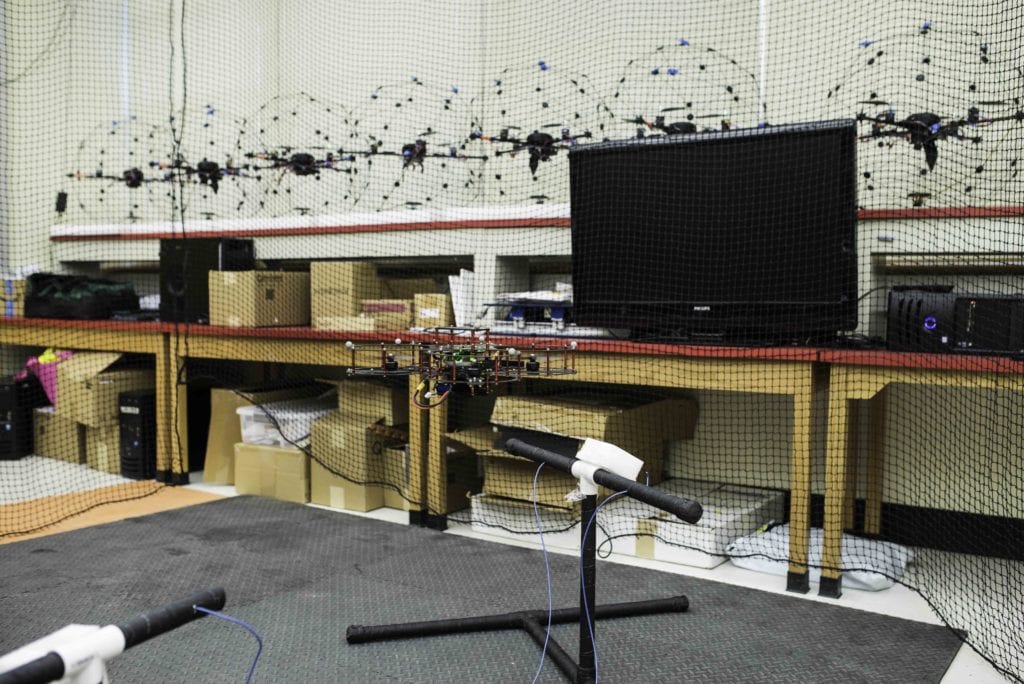

The QDrone in the video is searching for a tower, in this case a black PVC pipe structure with a piece of bright white foam placed on top. Once the QDrone takes off, it goes into a scanning mode, looking for the tower. The drone’s optical flow camera captures a grayscale image of the environment. A binary threshold algorithm running on board identifies the bright pixel values corresponding to the piece of foam. A blob-detection algorithm then finds all the blobs in the thresholded image, draws a box around each one, and identifies the center of the box. The pixel coordinates of that center represent the tower’s location. The QDrone sends these coordinates to the mission server running on the ground station PC.
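For readers who want to see the detection step concretely, here is a minimal sketch in plain Python. It is not the code running on the QDrone: the function names, the threshold level of 200, and the tiny test frame are all assumptions made for illustration.

```python
from collections import deque

def threshold(gray, level=200):
    """Binary mask: True where a pixel is brighter than `level` (assumed value)."""
    return [[px > level for px in row] for row in gray]

def find_blobs(mask):
    """Flood-fill 4-connected blobs; return one box (r0, c0, r1, c1) per blob."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            # Start a new blob here and grow its bounding box as we go.
            r0 = r1 = r
            c0 = c1 = c
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                r0, r1 = min(r0, y), max(r1, y)
                c0, c1 = min(c0, x), max(c1, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((r0, c0, r1, c1))
    return boxes

def box_center(box):
    """Center of a bounding box in (row, column) pixel coordinates."""
    r0, c0, r1, c1 = box
    return ((r0 + r1) / 2, (c0 + c1) / 2)

# Hypothetical 5x6 grayscale frame with one bright 3x3 patch (the "foam").
frame = [
    [10, 10, 10, 10, 10, 10],
    [10, 250, 250, 250, 10, 10],
    [10, 250, 250, 250, 10, 10],
    [10, 250, 250, 250, 10, 10],
    [10, 10, 10, 10, 10, 10],
]
centers = [box_center(b) for b in find_blobs(threshold(frame))]
print(centers)  # [(2.0, 2.0)]
```

On a real camera frame you would likely also discard blobs below a minimum size, since a single bright pixel would otherwise count as a tower.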

Identifying the “tower” initiates the exploratory phase of the mission. The QDrone approaches the tower using visual servoing and takes pictures. With that accomplished, the drone returns to scan the environment, searching for the next tower. After repeating a certain number of scanning loops, the drone returns to its home position and lands.

Looking under the hood

All this may sound relatively simple, but there are a few issues the researchers at UIW’s AVS Lab had to solve. For example, they wanted the whole mission to run autonomously. The QDrone is an autonomous vehicle, i.e., there’s a state machine running on its Commander module that takes care of all the flight states. However, in this case, the mission server running on the ground control station PC also had to be autonomous. That required introducing a second state machine on the mission server. With this setup, the QDrone communicated with the ground station, took off, performed the search and scan, returned, and landed. It completed all these tasks on its own, without any user input.
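To give a feel for what a mission-level state machine like this might look like, here is a small sketch. The state names, the transition conditions, and the `max_loops` parameter are assumptions based on the mission description above, not the lab's actual implementation.

```python
from enum import Enum, auto

class Mission(Enum):
    SCAN = auto()
    APPROACH = auto()
    CAPTURE = auto()
    RETURN_HOME = auto()
    LAND = auto()

def next_state(state, *, tower_seen=False, at_tower=False, photos_done=False,
               loops_done=0, max_loops=3, at_home=False):
    """Return the next mission state given the current state and sensor flags.

    All flags are hypothetical inputs the mission server would compute from
    the drone's reports.
    """
    if state is Mission.SCAN:
        if loops_done >= max_loops:
            return Mission.RETURN_HOME      # enough scanning loops, go home
        return Mission.APPROACH if tower_seen else Mission.SCAN
    if state is Mission.APPROACH:
        return Mission.CAPTURE if at_tower else Mission.APPROACH
    if state is Mission.CAPTURE:
        return Mission.SCAN if photos_done else Mission.CAPTURE
    if state is Mission.RETURN_HOME:
        return Mission.LAND if at_home else Mission.RETURN_HOME
    return Mission.LAND                      # LAND is terminal

# One pass through the mission with hand-picked flags.
s = Mission.SCAN
s = next_state(s, tower_seen=True)           # -> APPROACH
s = next_state(s, at_tower=True)             # -> CAPTURE
s = next_state(s, photos_done=True)          # -> SCAN
s = next_state(s, loops_done=3)              # -> RETURN_HOME
s = next_state(s, at_home=True)              # -> LAND
print(s.name)  # LAND
```

Keeping the transition logic in one pure function makes it easy to test every branch on the ground before anything flies.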

Right off the bat, we ran into an issue switching between the ‘scanning’ and ‘approach tower’ modes. Typically, circular (scanning) trajectories are generated as the sine and cosine of the time value multiplied by the angular velocity. That’s relatively simple. But if you want to pause the trajectory (e.g., when the drone finds a tower), the model time does not pause for you. You can request the QDrone to hold its position, but the moment you resume, the QDrone will try to jump to the location the trajectory generator has reached in the meantime, instead of where the circle was paused. A pretty neat trick solved the problem: we used a trajectory generator in the frequency domain instead of the time domain.

Once this was taken care of, another issue popped up: the QDrone would identify a tower and move towards it, but then it would immediately return to the position where it was when it found the tower. We realized that the position controller on the QDrone was tuned aggressively for optimal motion performance. Banking quickly to move towards the tower tilted the downward-facing optical flow camera to the point where it could not see the tower anymore. So we tuned down the gains to minimize the banking angles, letting the drone coast slowly towards the tower.

However, even after tuning the gains, we noticed the same behavior all over again. The QDrone would oscillate between its original scanning position and the position over the tower. Although the symptoms were the same as before, this time the cause was totally different. The QDrone simply moved to the tower, finished its task of capturing images or videos, and moved back to resume scanning. Then it would immediately find a tower again (unfortunately, the same one) and approach it once more. To solve this issue, we turned off the QDrone’s camera for a few seconds after it returned to scanning mode. That way, it ‘turned a blind eye’ to the tower it had just found. We also considered implementing a database that logs the locations of known towers, but we left that for a future implementation.
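The ‘blind eye’ idea can be sketched as a simple time gate on detections. This is an illustration only: the class name, the three-second default, and the way time is passed in are assumptions, and the real system switched the camera off rather than filtering its output.

```python
class DetectionGate:
    """Drops tower detections for a short period after scanning resumes."""

    def __init__(self, blind_seconds=3.0):
        self.blind_seconds = blind_seconds   # assumed cool-down length
        self.blind_until = float("-inf")     # no blind period at start

    def resume_scanning(self, now):
        """Call when the drone returns to scanning mode."""
        self.blind_until = now + self.blind_seconds

    def accept(self, detection, now):
        """Return the detection, or None while the gate is still blind."""
        if now < self.blind_until:
            return None
        return detection

gate = DetectionGate(blind_seconds=3.0)
print(gate.accept((120, 88), now=0.0))    # (120, 88)
gate.resume_scanning(now=10.0)
print(gate.accept((120, 88), now=11.0))   # None, still inside the blind period
print(gate.accept((120, 88), now=13.0))   # (120, 88)
```

The blind period has to be long enough for the scanning trajectory to carry the tower out of the camera’s view, otherwise the drone will simply find it again.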

As we continued scanning the lab space for the “towers,” we noticed another interesting behavior. In one particular corner, the QDrone would claim it had found a tower. But as it moved towards it, it would lose the tower and do nothing. In this case, the culprit was the sharp ceiling lights reflecting off a patch of floor not covered by the rubber matting tiles. The QDrone would pick up the reflection as a tower marker and try to move towards it. However, a physical constraint on the drone’s allowable workspace prevented it from ever getting to the target. It would just wait at the edge of the workspace indefinitely.

As everything was running autonomously, our only input to the system was to abort the mission. We shut off the motors and let the QDrone free-fall to the floor. That’s when its impact-resistant design proved itself again! The QDrone easily withstood all the crash-landings while we found a solution.
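The post does not say which fix was eventually adopted. Purely as an assumption, one plausible guard is to ignore targets that lie outside the allowed workspace and to give up on an approach that takes too long. The sketch below also assumes an estimated target position in workspace coordinates, which the pixel-based servoing described above does not necessarily provide.

```python
def inside_workspace(target, bounds):
    """True if the (x, y) target lies within ((xmin, xmax), (ymin, ymax))."""
    x, y = target
    (xmin, xmax), (ymin, ymax) = bounds
    return xmin <= x <= xmax and ymin <= y <= ymax

def approach_decision(target, bounds, elapsed, timeout=10.0):
    """Decide what to do with a detected target (all names are hypothetical).

    target  : estimated (x, y) of the detection in workspace coordinates
    elapsed : seconds spent approaching this target so far
    timeout : assumed upper limit on a single approach
    """
    if not inside_workspace(target, bounds):
        return "ignore"          # unreachable, likely a reflection
    if elapsed > timeout:
        return "resume_scan"     # stuck, go back to scanning
    return "approach"

bounds = ((-2.0, 2.0), (-2.0, 2.0))    # hypothetical 4 m x 4 m workspace
print(approach_decision((2.5, 0.0), bounds, elapsed=0.0))    # ignore
print(approach_decision((1.0, 1.0), bounds, elapsed=12.0))   # resume_scan
print(approach_decision((1.0, 1.0), bounds, elapsed=2.0))    # approach
```

Either check on its own would have kept the drone from waiting at the workspace edge forever.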

Speeding up the research

If you think all this finessing took us a month, I have to correct you. We finished in less than two days! The Autonomous Vehicles Research Studio comes with software packages, including pre-optimized stabilization and position control algorithms for the QDrone. The algorithms are very versatile: the drone simply has to receive information about where it is and where it should go. With this architecture, the process of integrating the QDrone into the AVS Lab’s application was streamlined and efficient.

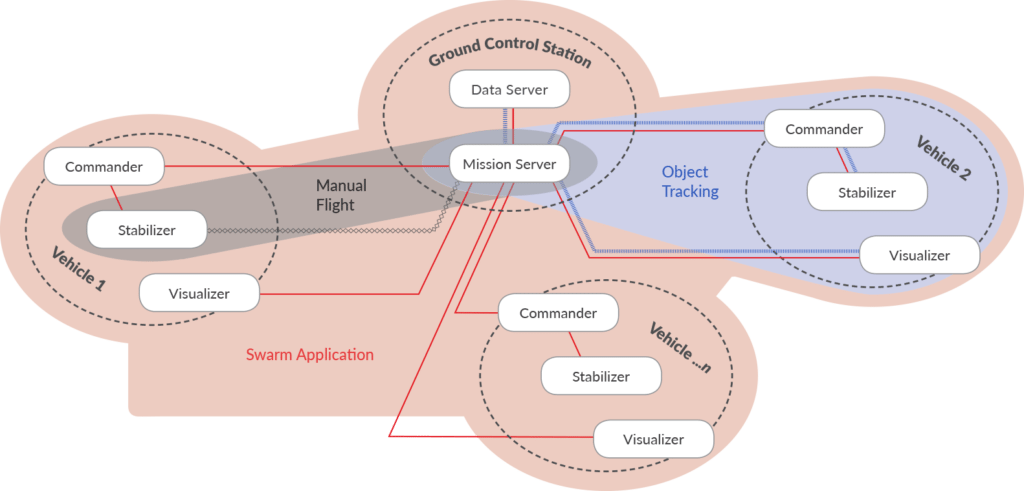

Autonomous Vehicles Research Studio Software Architecture

The demo also perfectly illustrates the vision capabilities of the QDrone. Not only do you get on-board image acquisition, but on-board image processing as well. The visualizer model on the QDrone captures what the drone sees, streams it back to the ground control station PC, and saves it. It also processes the image to extract information, saving the processed images as well. On top of that, it sends the key information extracted from the image to the mission server. All of this happens in real time on board the flying QDrone, alongside the stabilization and position control algorithms.

Another feature that made the quick implementation possible was the communication architecture of the Autonomous Vehicles Research Studio. You have the mission server talking to the QDrone’s stabilization algorithm and vision algorithm, all using WiFi. You also have the mission server talking to the localization system and interfacing with the joystick.

What’s next

The UIW researchers prepared the demo as an indoor proof of concept. Once they take it outside, they will have to modify the application to some extent. With no WiFi available, they could, for example, transfer the mission server and trajectory generator algorithms onto the QDrone, so it generates its own information. That would include having its Visualizer and Commander modules exchange information directly as well.

Flying outside, the UIW team would also have to integrate a GPS instead of the indoor camera localization system. But from the QDrone’s perspective, it does not matter where the position information comes from – a localization system or an on-board GPS.

Another key issue would be the image and video storage. The QDrone has a 4MB on-board memory, which is great when communicating with the ground PC. Moving outdoors, the team would need to consider adding an external hard disk drive (via the micro-USB port on the Intel Aero Compute board) or a microSD card (also available on the Intel Aero Compute board) for additional storage capacity. That way, all the raw videos could be saved on the QDrone itself and retrieved later.

We are looking forward to seeing how the UIW team takes this research to the next phase!