When you are trying to finish your AI research faster and more cost-effectively, the GPU is your new best friend. Most gamers know how critical a good graphics card, or graphics processing unit (GPU), is to the enjoyment of a video game: it delivers smoother animations and higher frame rates. Most modern games and virtual simulation software (including our very own Quanser Interactive Labs) include complex pixel shading. This allows for nicer textures and more natural-looking light but requires better GPUs for rendering. However, most people don’t know that GPUs are used for more than just video games. In this blog, I’ll tackle the question of why GPUs matter. I will also look at why we’ve integrated one into Quanser’s self-driving car and why we ship high-end GPUs with our Autonomous Vehicle Research and Self-Driving Car Research Studios. By the end of this blog, I hope to inspire you with a few things you didn’t realize you could do with your GPU and the opportunities it presents in research.

If you have recently watched a Pixar or DreamWorks animation, the beautiful renderings are a direct result of extremely powerful GPUs. Ever had a CT or MRI scan done? A plethora of GPUs work on analyzing all sorts of information from those scans. Even watching YouTube on your phone in a small window while scrolling through this blog uses the power of the GPU. Once used only for graphics-related tasks, GPUs are now heavily used in a wide range of fields, including cutting-edge research and computing. If these systems are so widely used today, this raises the question:

What is a GPU and how does it work?

A GPU is a single processor made up of many smaller processing units called “cores”. These cores let a single instruction run on many data inputs at the same time, which shortens computation time. In general, the more cores, the more operations you can run in parallel. For this kind of work, GPUs are more efficient than general-purpose central processing units (CPUs), because a CPU core typically executes one instruction at a time on one data input. Even modern CPUs that can run a single instruction on multiple data inputs are bottlenecked by having too few cores to process large amounts of data quickly. Every core on a GPU or CPU adds capacity for simultaneous operations; GPUs simply offer more parallel processing power because they contain far more cores.
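To make the "one instruction, many data inputs" idea concrete, here is a minimal Python sketch. It is a toy model rather than a benchmark: the function names `brighten` and `idealized_steps` and the core counts are hypothetical, and the model assumes every core handles exactly one item per step while ignoring clock speed, memory bandwidth, and scheduling overhead.

```python
from math import ceil


def brighten(pixels, gain):
    """Apply one instruction (multiply by gain) to every data input.

    Each element is independent of the others, which is what lets
    parallel hardware process many of them at the same time.
    """
    return [p * gain for p in pixels]


def idealized_steps(n_items, n_cores):
    """Steps needed if each core handles one item per step (toy model)."""
    return ceil(n_items / n_cores)


if __name__ == "__main__":
    print(brighten([10, 20, 30], 2))
    n_items = 1_000_000
    for n_cores in (8, 256, 4096):  # hypothetical core counts
        print(n_cores, "cores:", idealized_steps(n_items, n_cores), "steps")
```

In this idealized picture the number of steps falls in proportion to the number of cores, which is the intuition behind "the more cores, the more parallel processes you can run". Real speedups are usually smaller because the cores of a GPU and a CPU are not equally fast.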

Since GPUs make light work of massively parallel computations, the more data you push through the same repetitive tasks, the more important the GPU becomes. In machine learning, this could be the difference between your neural network training in a few minutes or hours rather than in days, weeks, or years. That cost adds up fast when you are trying to finish your research efficiently. GPUs can sit on a video card, be embedded into a motherboard, or be integrated into the same chip as the CPU. This makes them not only powerful but also highly versatile, which leads us to the next question:

Why have GPUs only recently become so popular if they are this useful?

Before 2007, users were expected to work through specialized graphics APIs like OpenGL and Direct3D, which were impractical to learn and use for general-purpose computing. This created a barrier to entry for GPUs in machine learning and deep learning tasks. However, in 2007, NVIDIA launched the CUDA framework, which was designed to work with C, C++, and Fortran and provided users with an API. These changes lowered the barrier to entry and opened the market to a wider variety of programmers. This was a game changer that set the stage for advances in machine learning.

In 2009, a Stanford research paper by Raina et al. helped standardize the use of GPUs for machine learning and deep learning due to their speed in training models. Since then, as the power of machine learning and deep learning has become more recognized and more important in our society and technology, GPUs have become more important for us to understand and value.

The role of the GPU in our Studios and QLabs

Here at Quanser, we look to build highly adaptable embedded platforms at the cutting edge of research and teaching to meet our academic community’s needs. As we build hardware to support smarter, more complex systems, GPU hardware has become more essential. This is why our research and teaching studios are outfitted to meet these needs with ease.

Our Self-Driving Car and Autonomous Vehicle Research Studios come with specialized high-performance computers that contain all the software and hardware necessary for jump-starting your research in your space. The NVIDIA GeForce RTX series has been our GPU series of choice due to its stunning capabilities. NVIDIA introduced what it marketed as the first GPU in 1999 and has been a leader since. RTX is a technology that NVIDIA pioneered for ray tracing, and the company has dominated the space since. Ray tracing is a way of modelling the physical behavior of light. This GPU enables our QLabs to do real-time dynamic lighting.

This allows researchers to study how lighting conditions, reflections, and camera lenses will affect autonomous driving, so they can improve their models before implementing them on hardware. The GPU is an essential part of real-time twinning. Everything from the camera lens view to lighting, depth viewing, detail, and more comes from the GPU.
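For readers curious what "modelling the physical behavior of light" can look like in code, here is a minimal Python sketch of the core ray-tracing step: casting one ray, finding where it hits a sphere, and computing how brightly a light illuminates that point. This is not how QLabs or RTX hardware implements it. The scene (one sphere, one light), the simple diffuse shading model, and the function names `hit_sphere` and `diffuse_brightness` are all assumptions chosen for illustration.

```python
from math import sqrt


def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))


def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest hit, or None."""
    oc = tuple(o - c for o, c in zip(origin, center))
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0:
        return None  # the ray misses the sphere
    root = sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 0:
            return t  # nearest intersection in front of the origin
    return None


def diffuse_brightness(origin, direction, center, radius, to_light):
    """Brightness in [0, 1] at the hit point, or 0.0 on a miss.

    to_light is a unit vector pointing from the surface toward the light.
    """
    t = hit_sphere(origin, direction, center, radius)
    if t is None:
        return 0.0
    point = tuple(o + t * d for o, d in zip(origin, direction))
    normal = tuple((p - c) / radius for p, c in zip(point, center))
    return max(0.0, dot(normal, to_light))


if __name__ == "__main__":
    camera, ray = (0, 0, 0), (0, 0, 1)
    print(diffuse_brightness(camera, ray, (0, 0, 5), 1, (0, 0, -1)))
```

A renderer repeats this calculation for every pixel, and each ray is independent of the others, which is why the workload suits the many cores of a GPU.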

From training your machine learning and deep learning models, to testing your hardware effortlessly in our virtual QLabs, this GPU can tackle it all.

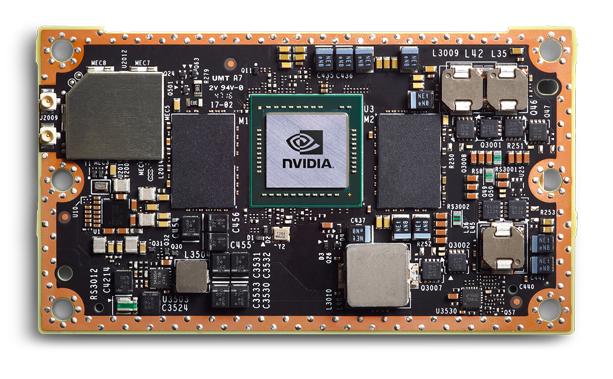

The role of the GPU in the QCar

When choosing a GPU for the QCar, the NVIDIA Jetson TX2 was the top choice by far. The Jetson line of embedded AI computers is well known and reputable. With high computational power packed into a design smaller than a credit card, this choice was easy. The module boasts 256 CUDA cores on the NVIDIA Pascal GPU architecture. It also features a dual-core NVIDIA Denver 2 64-bit CPU along with a quad-core ARM® Cortex®-A57 MPCore CPU. This little module delivers a lot of bang for its buck.

This integrated GPU opens up endless research and teaching opportunities to make the QCar your own. With faster real-time video rendering, it can reduce glitches and stuttering. Using simultaneous localization and mapping (SLAM), you can map your spaces more quickly. When training or deploying models on the car, the onboard GPU is your best friend for efficiency. The GPU’s opportunities are truly endless.

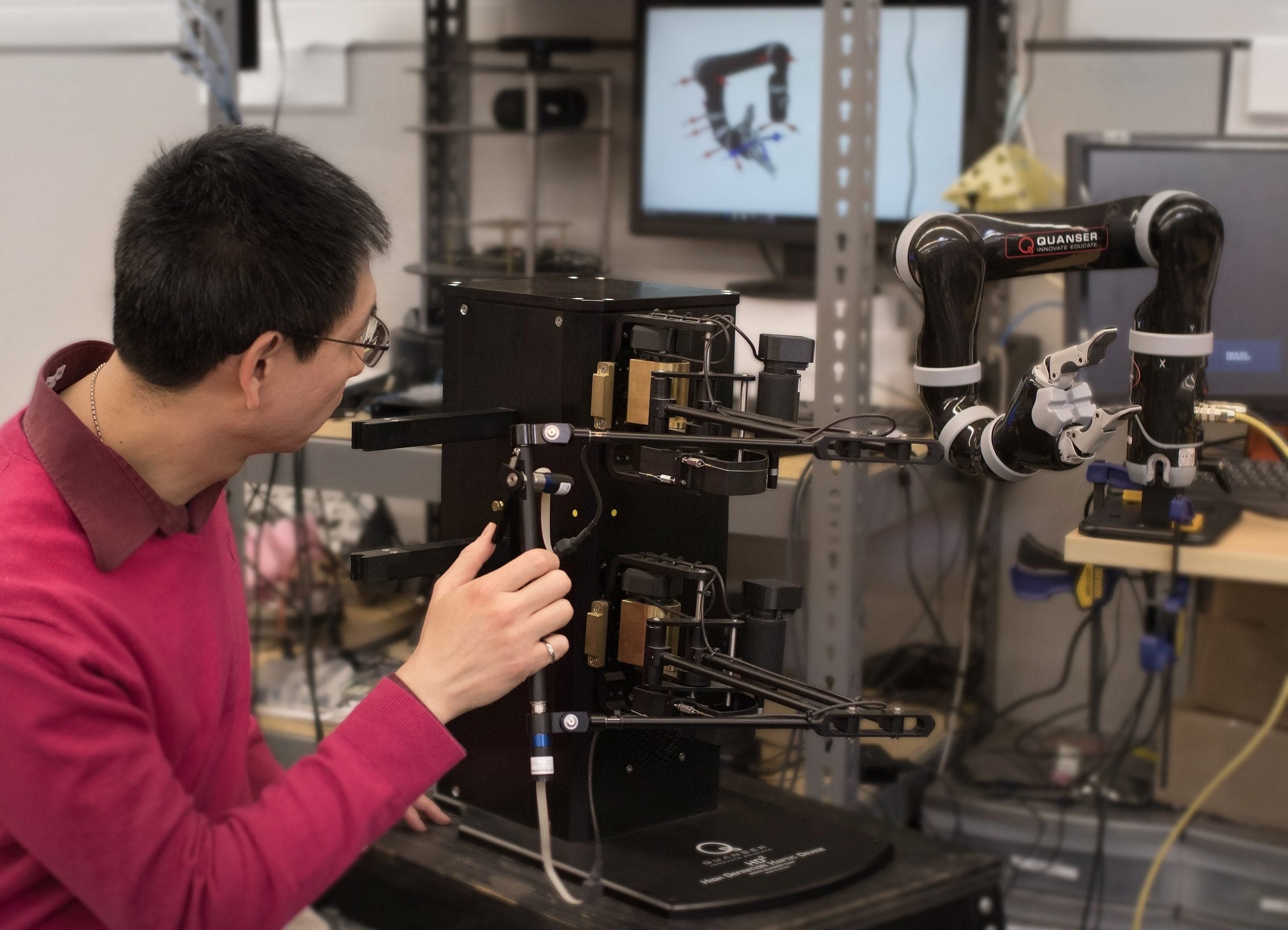

Check out the cool research that York University is doing running trained game-theory reinforcement learning models on QCar GPUs at uncontrolled intersections below:

Are you using your GPU?

The GPU is a powerful beast that speeds up your projects by completing repetitive computational tasks in a fraction of the time. If you aren’t currently reaping the benefits, I hope you now understand the important role GPUs play and feel inspired to use the GPU in the QCar, in our studio suites, or your own GPU.

Click here to check out one of our related webinars on getting started with Developing AI Applications with TensorFlow and MATLAB® Deep Learning Toolbox™. This video touches on training your models using the GPU to get you started.

Alternatively, check out the video below on How to Teach Artificial Intelligence. Here our COO Paul Karam and R&D Manager Murtaza Bohra talk about the Jetson TX2 on the QCar and the vision for the future of Quanser in the space of GPU integration into our aerial and ground robotics!